Achieving unrivaled performance with media and video streaming on demand

Back in 2018, our company founder said to me:

If I were building the next Netflix, I'd use layer 4 DR mode.

That stuck with me, partly because digital media and streaming are fascinating subjects. But why is our much-vaunted "DR mode" so well suited to streaming media?

Recap:

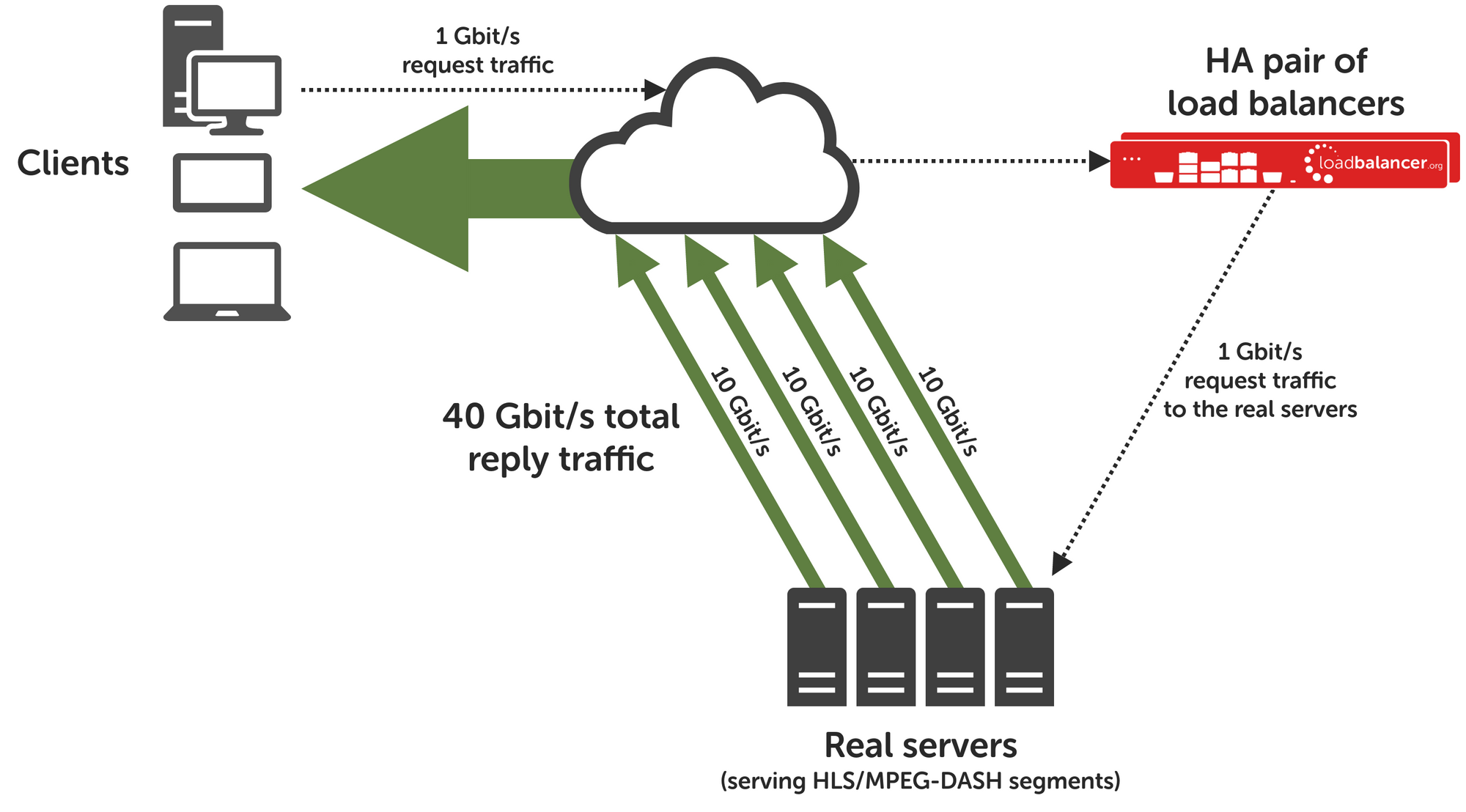

For the uninitiated, layer 4 DR (direct routing) mode is a high-performance load balancing method available on our appliances. Client requests arrive at the load balancer, which forwards each one to a real server; that server then sends its response straight back to the client. The load balancer is bypassed on the return journey, saving an extra hop and removing the possibility of the load balancer becoming a bottleneck for response traffic. This makes it an effective way to load balance services where the volume of response traffic eclipses the request traffic: a perfect fit for streaming media.
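To make the two paths concrete, here is a toy Python model of DR forwarding as it is commonly described for Linux Virtual Server (LVS) style load balancers: the load balancer rewrites only the layer 2 (MAC) addresses of an incoming frame and leaves the IP header alone, and each real server is assumed to also hold the virtual IP (VIP) locally, so it accepts the packet and replies to the client directly with the VIP as its source address. All class names, MAC strings, and IP addresses are hypothetical, and round-robin is just an illustrative scheduler; this is a sketch of the concept, not how the appliance is implemented or configured.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Frame:
    """A simplified Ethernet frame carrying an IP packet."""
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    payload: str


class RealServer:
    def __init__(self, name: str, mac: str, vip: str):
        self.name = name
        self.mac = mac
        # Assumption: the VIP is also configured on the server itself (e.g. on
        # a loopback interface) so it accepts packets addressed to the VIP.
        self.local_ips = {vip}

    def handle(self, frame: Frame) -> Frame:
        if frame.dst_mac != self.mac or frame.dst_ip not in self.local_ips:
            raise ValueError(f"{self.name} would drop this frame")
        # Reply directly to the client, using the VIP as the source address so
        # the client sees a response from the address it originally contacted.
        return Frame(
            src_mac=self.mac,
            dst_mac="gateway-mac",  # hypothetical next hop towards the client
            src_ip=frame.dst_ip,
            dst_ip=frame.src_ip,
            payload=f"{self.name}: contents of {frame.payload}",
        )


class DrLoadBalancer:
    def __init__(self, mac: str, servers: list[RealServer]):
        self.mac = mac
        self._next = cycle(servers)  # simple round-robin scheduling
        self.frames_seen = 0

    def forward(self, frame: Frame) -> tuple[RealServer, Frame]:
        self.frames_seen += 1
        server = next(self._next)
        # Only the layer 2 (MAC) addresses are rewritten; the IP header,
        # including the VIP as destination, is left untouched.
        return server, replace(frame, src_mac=self.mac, dst_mac=server.mac)


VIP = "192.0.2.10"
lb = DrLoadBalancer("lb-mac", [RealServer("rs1", "aa:aa", VIP),
                               RealServer("rs2", "bb:bb", VIP)])

request = Frame("router-mac", "lb-mac", "198.51.100.7", VIP, "stream X")
server, forwarded = lb.forward(request)
reply = server.handle(forwarded)  # the reply never passes back through `lb`
print(reply.src_ip, "->", reply.dst_ip)      # 192.0.2.10 -> 198.51.100.7
print("frames through LB:", lb.frames_seen)  # 1 (the request only)
```

The load balancer's counter only ever increments on requests: however large the reply, it is handed straight from the real server towards the client.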

(We've written extensively about layer 4 DR mode in the past; see our earlier blog posts for more information.)

The Streaming Media Use Case

Let's look specifically at streaming media, either live broadcasts or video on demand (VOD).

One common approach is to use a CDN to handle last-mile delivery, whereby you stream to your CDN provider and they then distribute your stream to various endpoints within their network. You might do that if you were streaming to a geographically diverse audience and needed to keep latency low. In some scenarios, however, you won't be using a CDN and will serve content directly yourself: for example, serving HLS or MPEG-DASH streams straight from your own servers.

How Load Balancing Helps

Load balancing streaming media servers provides two key benefits: scalability and high availability.

Scaling and Capacity

A single server can only reliably accommodate a limited number of end users. Assuming that the main limiting factor is network bandwidth, let's look at two example streams and see how many unique clients a single server could theoretically support at the same time over a 10 Gbit/s connection to the clients (e.g. to the public internet).

| Stream Type | Approximate Average Bitrate (Mbit/s) | Theoretical Maximum Number of Streams over a Single 10 Gbit/s Connection |
|---|---|---|
| 1080p Twitch stream | 6 | 1666 |
| 4k YouTube video | 17 | 588 |

Those figures make lots of assumptions, such as zero overhead at the stream/application level (not true), zero overhead at the network level (also not true), and perfect, continuous end-to-end transmission (definitely not true). In reality, the maximum number of streams would be lower.
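The table's figures come from dividing the link capacity by the per-stream bitrate and rounding down. The sketch below reproduces them. The `efficiency` parameter is a hypothetical knob standing in for the stream, network, and transmission overheads listed above, and the 80% value used in the example is an illustrative assumption, not a measurement.

```python
import math


def max_streams(link_mbit_s: float, bitrate_mbit_s: float,
                efficiency: float = 1.0) -> int:
    """Theoretical number of concurrent streams one link can carry.

    efficiency=1.0 reproduces the idealised figures (zero overhead, perfect
    transmission); lower values are a rough, assumed allowance for overhead.
    """
    if bitrate_mbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bitrate must be positive and efficiency in (0, 1]")
    return math.floor(link_mbit_s * efficiency / bitrate_mbit_s)


LINK = 10_000  # 10 Gbit/s expressed in Mbit/s

for name, bitrate in [("1080p Twitch stream", 6), ("4k YouTube video", 17)]:
    ideal = max_streams(LINK, bitrate)
    derated = max_streams(LINK, bitrate, efficiency=0.8)  # assumed 80% usable
    print(f"{name}: {ideal} ideal, {derated} at an assumed 80% efficiency")
```

This prints 1666 and 1333 for the 1080p stream and 588 and 470 for the 4k video: even a modest overhead allowance removes hundreds of viewers per server.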

Layer 4 DR mode load balancing makes scaling practically limitless. Load balancing allows multiple servers to operate together as a cluster, so capacity can be scaled out as needed: as the number of end users grows, simply add another server.
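Since each additional server brings roughly one more link's worth of reply capacity, sizing the cluster comes down to a ceiling division. A minimal sketch, assuming identical servers with 10 Gbit/s uplinks, bandwidth as the only limit, and an optional number of spare servers (for example one, so capacity survives a single failure); the audience size is hypothetical.

```python
import math


def servers_needed(viewers: int, bitrate_mbit_s: float,
                   link_mbit_s: float = 10_000, spare: int = 0) -> int:
    """Servers required to carry `viewers` concurrent streams, plus spares."""
    per_server = math.floor(link_mbit_s / bitrate_mbit_s)  # streams per server
    if per_server < 1:
        raise ValueError("a single stream exceeds one server's link capacity")
    return math.ceil(viewers / per_server) + spare


# Hypothetical audience of 10,000 concurrent 1080p (6 Mbit/s) viewers.
print(servers_needed(10_000, 6))           # 7
print(servers_needed(10_000, 6, spare=1))  # 8
```

Growing the audience just moves the ceiling: one more viewer beyond a multiple of 1666 means one more server.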

The reason that DR mode is so perfectly suited to streaming media is that the reply traffic flows from the servers straight back to the clients, without the load balancer in the way to potentially act as a bottleneck. With streaming media, the request traffic is small ("send me stream X") while the reply traffic is enormous (the entirety of "stream X"), so taking the load balancer out of the picture on the return path is a huge boon for scalability.
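To put rough numbers on the return-path argument, the sketch below compares the throughput the load balancer itself must carry when replies flow back through it against DR mode, where it only carries requests. The audience size and the per-viewer request rate are hypothetical placeholders chosen for illustration; the 6 Mbit/s stream rate is the 1080p figure from the table above.

```python
def lb_throughput_mbit_s(viewers: int, request_mbit_s: float,
                         reply_mbit_s: float, direct_return: bool) -> float:
    """Aggregate traffic the load balancer itself must forward, in Mbit/s."""
    inbound = viewers * request_mbit_s  # requests always pass through the LB
    if direct_return:
        return inbound  # DR mode: servers reply to clients directly
    return inbound + viewers * reply_mbit_s  # replies also traverse the LB


VIEWERS = 5_000       # hypothetical audience
REQUEST_RATE = 0.001  # assumed ~1 kbit/s of request traffic per viewer
STREAM_RATE = 6       # 1080p stream, Mbit/s

via_lb = lb_throughput_mbit_s(VIEWERS, REQUEST_RATE, STREAM_RATE,
                              direct_return=False)
dr_mode = lb_throughput_mbit_s(VIEWERS, REQUEST_RATE, STREAM_RATE,
                               direct_return=True)
print(f"replies via load balancer: {via_lb:,.0f} Mbit/s")
print(f"layer 4 DR mode:           {dr_mode:,.0f} Mbit/s")
```

Under these assumptions the load balancer would need to forward about 30 Gbit/s if replies came back through it, three times a single 10 Gbit/s link, compared with roughly 5 Mbit/s in DR mode.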

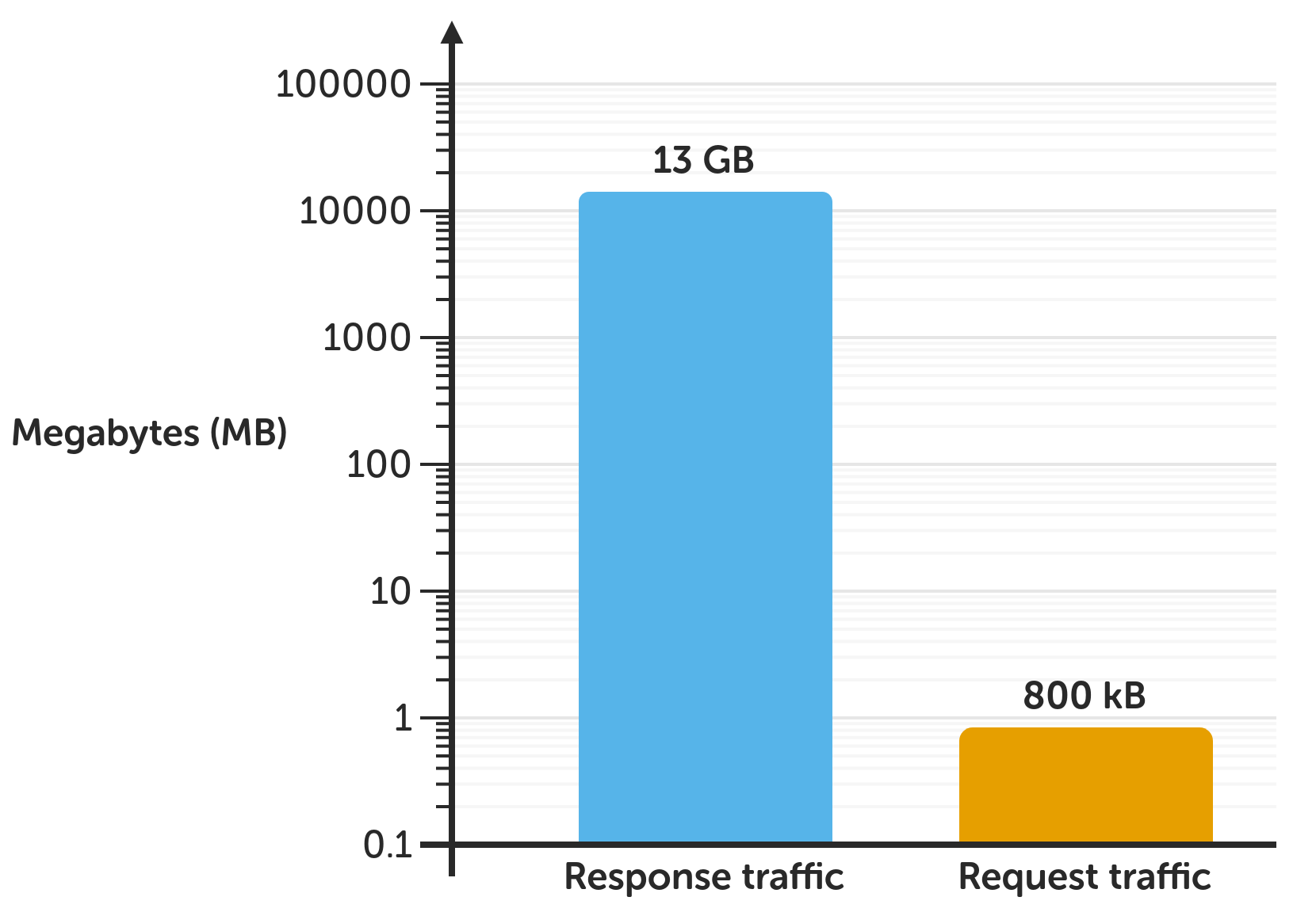

Let's look at a real-world VOD example: the response and request traffic generated by viewing a 4½-hour Twitch stream.

13 GB of media was received from the streaming server, while all of the request traffic combined amounted to a mere 800 kB. Response traffic was more than 16,000 times larger than request traffic: a difference of more than four orders of magnitude. This is why having the real servers respond directly to the clients is such an enormous benefit of layer 4 DR mode load balancing: with streaming media, the overwhelming majority of the traffic is response traffic.
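The arithmetic behind those figures is shown below, using decimal units (1 GB = 10^9 bytes, 1 kB = 10^3 bytes). It also derives the average bitrate implied by 13 GB over 4½ hours, which lands close to the roughly 6 Mbit/s 1080p Twitch figure in the table above.

```python
import math

response_bytes = 13e9    # 13 GB received from the streaming server
request_bytes = 800e3    # 800 kB of request traffic in total
duration_s = 4.5 * 3600  # 4½ hours in seconds

ratio = response_bytes / request_bytes
orders_of_magnitude = math.floor(math.log10(ratio))
avg_bitrate_mbit_s = response_bytes * 8 / duration_s / 1e6

print(f"response/request ratio: {ratio:,.0f}")              # 16,250
print(f"orders of magnitude: {orders_of_magnitude}")         # 4
print(f"average bitrate: {avg_bitrate_mbit_s:.1f} Mbit/s")  # 6.4
```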

High Availability (HA)

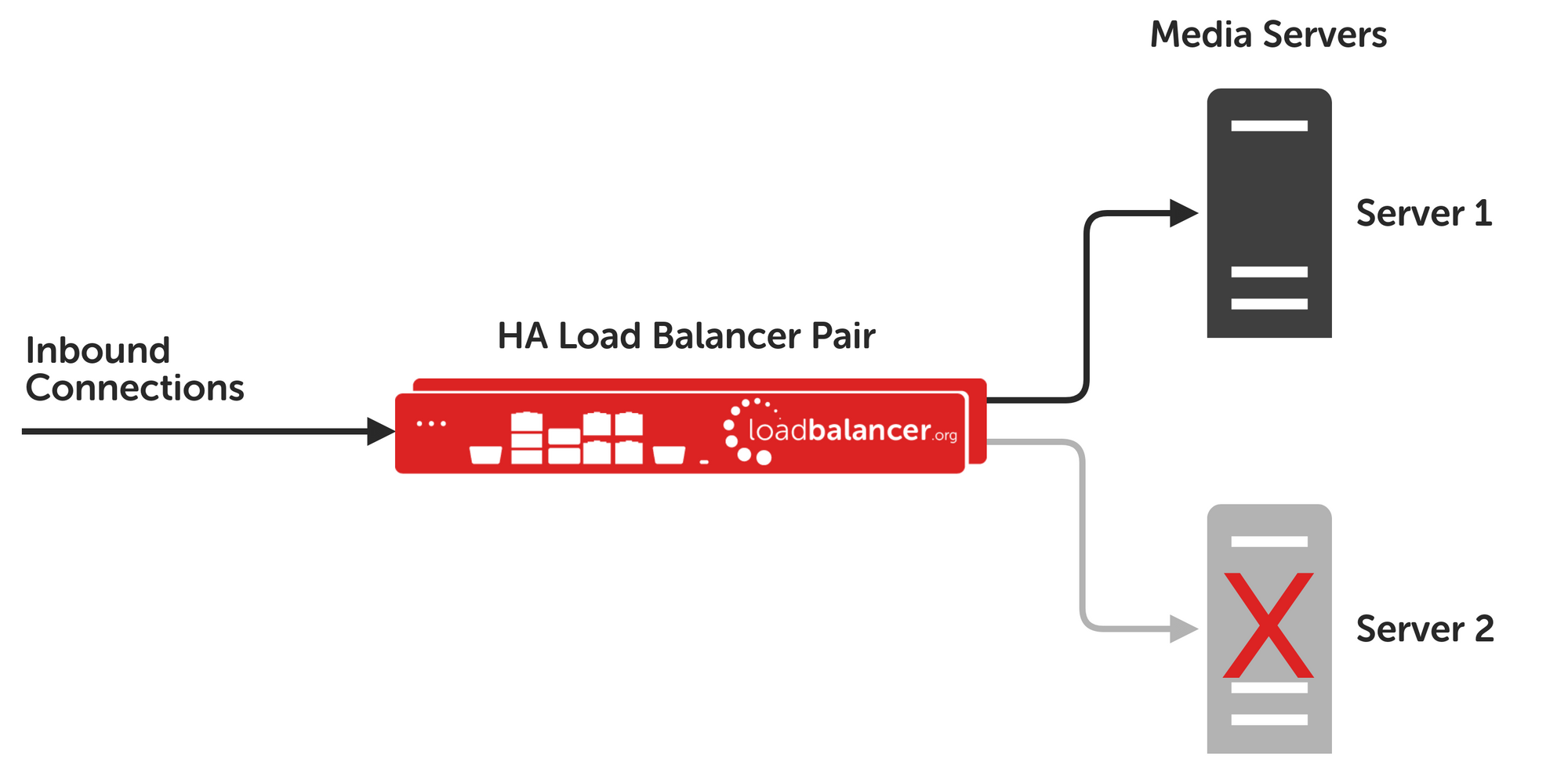

If you're relying on a single media server for all your streaming needs, what happens when that server suffers downtime? Even if it's for planned maintenance, server downtime means a service outage for clients. The worst-case scenario is you have a solo server and it falls over in the middle of streaming a live event. That's probably not going to be acceptable to your end users.

The other big benefit of load balancing and having a cluster of servers is that the service becomes highly available. If a single server becomes unavailable then it's not a big problem, as long as there are other servers still online and healthy in the cluster (and if all servers are down then you've got bigger problems!).
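This failover rests on health checking: the load balancer regularly checks each real server and only schedules new connections to servers that pass. A minimal sketch of that idea, assuming a simple round-robin scheduler and a health flag updated by some external check; the class and method names are hypothetical and do not correspond to the appliance's configuration.

```python
class HealthAwarePool:
    """Round-robin over real servers, skipping any marked unhealthy."""

    def __init__(self, servers: list[str]):
        self.servers = list(servers)
        self.healthy = {s: True for s in self.servers}
        self._index = 0

    def set_health(self, server: str, ok: bool) -> None:
        # In practice this would be driven by a periodic health check.
        self.healthy[server] = ok

    def pick(self) -> str:
        # Try each server at most once, starting from the round-robin position.
        for _ in range(len(self.servers)):
            server = self.servers[self._index]
            self._index = (self._index + 1) % len(self.servers)
            if self.healthy[server]:
                return server
        raise RuntimeError("no healthy servers available")


pool = HealthAwarePool(["rs1", "rs2"])
print([pool.pick() for _ in range(4)])  # ['rs1', 'rs2', 'rs1', 'rs2']
pool.set_health("rs1", False)           # simulate a server failure
print([pool.pick() for _ in range(3)])  # ['rs2', 'rs2', 'rs2']
```

With segmented protocols such as HLS or MPEG-DASH, the player requests each segment separately over HTTP, so after a failure its subsequent requests can simply be scheduled to a healthy server.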

To prove the point, I put together a short demonstration in which the same video is streamed twice: first directly from a server, and then via a layer 4 DR mode load-balanced service. The load-balanced stream never misses a beat, even during a simulated server failure: all traffic is redirected to the remaining healthy server, with no interruption to the client (the browser video player).

DR Mode: Practically Built for Streaming Media

Layer 4 DR mode is an excellent way to make streaming media applications highly scalable and highly available. It handles huge volumes of network traffic with ease and is ideally suited to use cases like streaming media, where response traffic vastly outweighs request traffic. Our appliances make it easy to load balance media servers and gain these benefits.