Why 2022 will see a greater reliance on high availability

WARNING! This blog contains the generic phrase "IT modernization", which some of our readers may find distressing.

How things changed in 2021

2021 was the year of accelerated IT modernization (bear with me...). More specifically, IT teams were under intense pressure to adapt or upgrade their platforms, software, and technology in order to deliver:

- More personalized services, across both the public and private sector

- More scalable infrastructure, to accommodate exponential data growth

- More secure data storage, as we saw a rise in ransomware attacks on more vulnerable organizations such as universities

- More environmentally friendly procurement practices, furthering the shift from hardware to virtual

- More cloud-first strategies, requiring the replacement, re-platforming, or re-architecting of named applications.

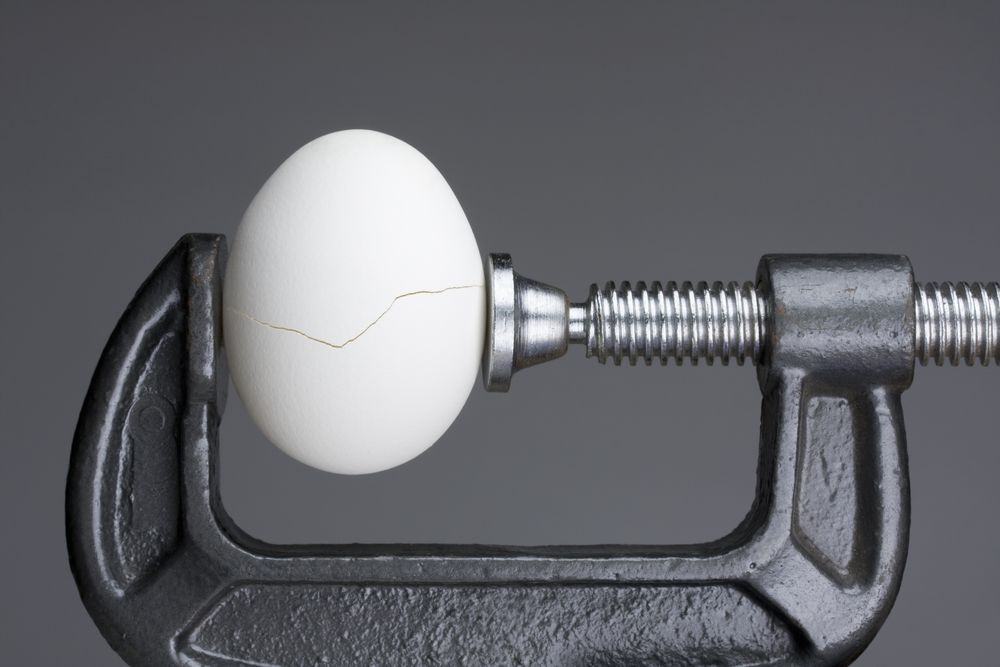

All of this has led to an accelerated demand for applications that are more resilient, scalable, and agile. But while IT modernization brings with it many benefits, it is not without risk.

The key architecture risks of modernization

While many of these applications are very reliable on their own, adding layer upon layer of increasingly complex, disparate, whizzy applications on top of legacy IT infrastructure introduces vulnerabilities that weren't there previously. This all undermines availability.

For example:

1. Some architecture may be too fragile to change - either because the in-house knowledge needed to make these changes no longer exists, or because changes cannot be made in isolation without breaking things elsewhere.

2. It is difficult to extend the functionality of legacy systems - Modernization is an inevitably gradual process, so marrying modern with legacy 'spaghetti code' infrastructure can be extremely challenging. There may be a lack of clear boundaries in the code base between technical and functional areas (unlike modern systems which typically have more service-oriented architecture). This all limits what is and isn't possible.

3. Maintenance may be more complicated - Applications may be so critical that they can no longer be taken offline, or it may be much harder to distribute the maintenance effort.

4. Isolating change is harder - For example, if data is held in a single database, modifications to the data structure will likely trigger a knock-on series of changes in other areas that rely on it.

5. Unsupported technology - Obsolete technology may no longer be supported, which means a greater risk to continuity if things are changed.

These risks all need to be mitigated with high availability solutions.

What this means for 2022

While availability may have been assumed in the past, and a degree of downtime seen as a necessary evil, this is no longer the case. In fact, this shift has led to a greater urgency to simplify and de-risk IT infrastructure, not least in healthcare, where the web of modern and legacy applications is now so complex that IT teams recognize that existing frameworks are undermining reliability.

Furthermore, with an increasing number of applications being moved to the cloud, the risks there must also be mitigated if high availability is to be guaranteed, for the following reasons:

Firstly, cloud infrastructure is not indestructible.

It is still based on physical hardware somewhere on the planet, and while the cloud is considered to be secure, there have been some major disasters, and outages are actually quite common.

Research shows that, on average, cloud outages result in 7.74 hours of downtime per service per year.
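To put that figure in context, it can be converted into an availability percentage. The snippet below is a rough sketch of the arithmetic, assuming a 365-day year (8,760 hours); the function names are illustrative and not taken from the research itself.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year, 8,760 hours


def availability_percent(downtime_hours: float) -> float:
    """Convert annual downtime in hours into an availability percentage."""
    if not 0 <= downtime_hours <= HOURS_PER_YEAR:
        raise ValueError("downtime must be between 0 and 8,760 hours")
    return 100 * (1 - downtime_hours / HOURS_PER_YEAR)


def allowed_downtime_hours(target_percent: float) -> float:
    """Annual downtime budget, in hours, for a given availability target."""
    return HOURS_PER_YEAR * (1 - target_percent / 100)


# The 7.74 hours quoted above, expressed as availability.
print(f"{availability_percent(7.74):.3f}%")

# For comparison: the downtime budget implied by a 99.99% target.
print(f"{allowed_downtime_hours(99.99):.2f} hours")
```

On those assumptions, 7.74 hours of downtime works out to roughly 99.91% availability, whereas a 99.99% target leaves a budget of under one hour per year.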

Amazon Web Services' 2011 EC2 outage is an example of one of the largest cloud disasters, resulting in several days of service failures and downtime, and causing major headaches for IT teams globally. Since then there have been less severe but increasingly frequent outages. In 2021 alone, IBM, Microsoft Azure, AWS, and GCP all experienced cloud service disruption, preventing customers from accessing their accounts, services, projects, and critical data for many long, painful hours.

Not all cloud providers have the same ability to scale. And the more customers use their physical hardware, the greater the risk of failure. If we've learned one thing from history, it's that no one is too big to fail. Thankfully, the larger service providers now have a number of measures in place to prevent catastrophic failures. However, poor disaster recovery processes at the user end can also cause problems if an outage does happen.

Secondly, organizations shouldn't put all their trust in a provider they have no control over or visibility into.

Although a cloud-first strategy may seem like the answer, organizations should not become complacent. Cloud computing still requires third-party testing of your software to ensure it remains secure, and that it has no weak spots that could be exploited through script injection or cross-site scripting.

As more and more organizations consider increasing their workloads in the cloud, these security and compliance responsibilities should not be underestimated.

Thirdly, when moving to the cloud it is important to adopt the high availability approach most suited to your environment.

It is important to build for high availability and re-architect your platforms. However, increasingly we see customers preferring to take a 'lift and shift' approach, moving their applications directly to the cloud because it is the best, or only, option available to them. In this instance, a platform-agnostic load balancer will offer a more logical high availability solution than a cloud-native load balancer.

A cloud-native load balancer can certainly work well as a replacement for any on-premise equivalent. But, if you must keep things configured as they are, moving your existing load balancer to the cloud may make the most sense as it offers the exact same features and functionality, without the need to re-engineer anything or learn, test, and integrate new software.
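To make the underlying idea concrete, here is a toy sketch of what any load balancer does at its core: rotate traffic across backends and skip those that fail a health check. It is a simplified illustration only, not how any particular product works; the class name, methods, and backend addresses are all hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy round-robin balancer that skips backends marked unhealthy."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._ring = cycle(self._backends)

    def mark_down(self, backend):
        """Record a failed health check for a backend."""
        self._healthy.discard(backend)

    def mark_up(self, backend):
        """Record a recovered backend so it receives traffic again."""
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self):
        """Return the next healthy backend in rotation."""
        # Try each backend at most once per call.
        for _ in range(len(self._backends)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


# Hypothetical backend addresses, for illustration only.
lb = RoundRobinBalancer(["10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"])
lb.mark_down("10.0.0.2:80")  # simulate a failed health check
print([lb.next_backend() for _ in range(4)])
```

Because the logic depends only on a list of addresses, the same configuration could in principle run on-premise or in any cloud, which is the appeal of a platform-agnostic approach for 'lift and shift' migrations.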

So if you have critical applications or services you need to provide in 2022, then it might be worth looking again at the availability not just of your applications, but also of your end-to-end workflows.