As you know, we get excited about solutions, not semantics, so I'm going to go against the grain here and say 'Application Acceleration' is one of those buzzwords that's about as useful as all that designer water that's out there these days...

So when people ask about Application Acceleration, what do they really want?

To start with, modern applications are pretty fast already.

They do have some common bottlenecks though. For example...

WAN Acceleration

Most enterprise networks have relatively slow internet or WAN links between sites, and WAN accelerators are mostly about reducing the cost of these links by compressing the data that travels across them. For this to work, you need a WAN accelerator at BOTH ends of the link.
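To show why you need a box at both ends, here is a minimal Python sketch. It uses plain `zlib` compression as a stand-in for what a real WAN accelerator does (commercial products also use deduplication, caching and protocol optimization). The function names `send_over_wan` and `receive_from_wan` are hypothetical, and the payload is made up.

```python
import zlib

def send_over_wan(payload: bytes) -> bytes:
    """Hypothetical sending-side accelerator: compress before data crosses the link."""
    return zlib.compress(payload, level=6)

def receive_from_wan(wire_bytes: bytes) -> bytes:
    """Hypothetical receiving-side accelerator: restore the original bytes."""
    return zlib.decompress(wire_bytes)

# Repetitive traffic (repeated requests, chatty protocols) compresses well,
# so fewer bytes cross the link you are paying for.
payload = b"GET /reports/quarterly.pdf HTTP/1.1\r\nHost: intranet.example\r\n\r\n" * 200
wire = send_over_wan(payload)
restored = receive_from_wan(wire)

print(f"original: {len(payload)} bytes, on the wire: {len(wire)} bytes")
```

The compressed bytes are useless to the far end unless something there decompresses them, which is exactly why a single accelerator on one side of the link does nothing.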

Cloud Edge Caching and Acceleration

Your customers on the public internet can be anywhere in the world, so it's a common requirement to store large pieces of static data (e.g. pictures and video) as close as possible to each customer's location. Services like Cloudflare and Amazon CloudFront can help you do this very cheaply (Cloudflare has a very detailed technical blog about edge performance here: "Improving Origin Performance for Everyone with Orpheus and Tiered Cache").
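The basic idea behind edge caching can be sketched in a few lines of Python. This is a toy model, not how Cloudflare or CloudFront are implemented: the `EdgeCache` class, the `origin` function and the 300-second TTL are all hypothetical. The edge serves an object locally until it expires, and only goes back to the distant origin on a miss.

```python
import time
from typing import Callable, Dict, Tuple

class EdgeCache:
    """Hypothetical edge node: serves static objects locally until they expire."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float):
        self.fetch_from_origin = fetch_from_origin
        self.ttl_seconds = ttl_seconds
        self._store: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expiry time)
        self.origin_fetches = 0

    def get(self, path: str) -> bytes:
        now = time.monotonic()
        cached = self._store.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]  # hit: served from the location near the customer
        # Miss or expired: one trip back to the (possibly distant) origin server.
        self.origin_fetches += 1
        body = self.fetch_from_origin(path)
        self._store[path] = (body, now + self.ttl_seconds)
        return body

def origin(path: str) -> bytes:
    """Stand-in for the origin web server."""
    return b"<image bytes for " + path.encode() + b">"

edge = EdgeCache(origin, ttl_seconds=300)
for _ in range(1000):
    edge.get("/images/hero.jpg")
print(edge.origin_fetches)  # 1
```

A thousand requests for the same picture cost the origin a single fetch; every other request is answered from the edge.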

So what about load balancers?

Well, they actually make your application a tiny bit slower. They add another hop in the chain and a tiny bit of latency. Then why the hell does everyone use them?!

Well, they obviously give you reliability, maintainability, and scalability. And scalability does technically give you performance, i.e. add more servers to the cluster and it gets faster...

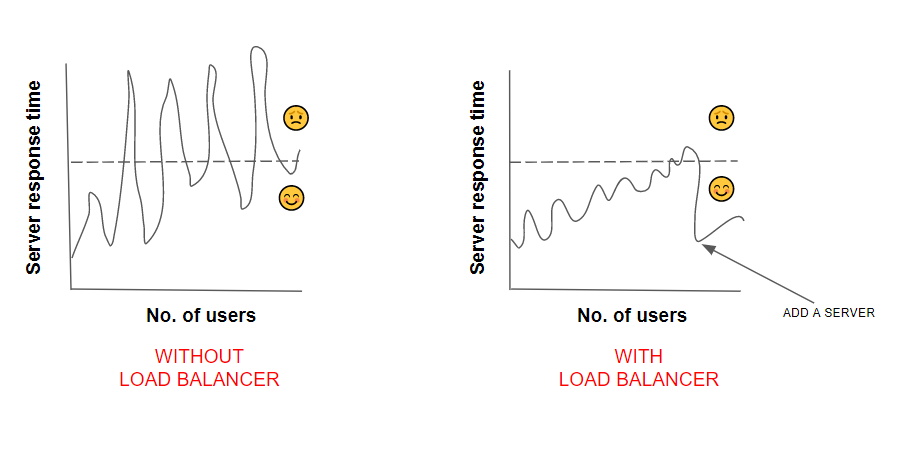

But the real golden nugget is the fact that customers don't want it faster. What they want is CONSISTENCY.

They want EVERY transaction to take approximately 1 second... And they HATE it when 3 clicks happen in one second and then suddenly 1 click gets queued for 16 seconds (or, worse, dropped or errored!!). It's like waiting an hour for a bus, then 3 turn up at once.

Load balancers help enormously by leveling the load across your application servers, so you get a CONSISTENT response.

They also monitor the health of each application server, so customers are not sent to a failed server and don't end up with an ERROR or a dropped transaction.
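Here is a minimal Python sketch of those two jobs working together, assuming a least-connections algorithm (one common choice among several, alongside round robin and weighted variants). The `Backend` and `pick_backend` names are hypothetical, and the health check is reduced to a boolean flag that a real load balancer would update by probing each server periodically.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Backend:
    name: str
    healthy: bool = True         # would be updated by periodic health checks
    active_connections: int = 0  # current load on this server

def pick_backend(pool: List[Backend]) -> Optional[Backend]:
    """Least-connections: send the request to the healthy server doing the least work."""
    candidates = [b for b in pool if b.healthy]
    if not candidates:
        return None  # nothing healthy to send the request to
    return min(candidates, key=lambda b: b.active_connections)

pool = [
    Backend("app1", active_connections=12),
    Backend("app2", active_connections=3),
    Backend("app3", healthy=False, active_connections=0),  # failed its last health check
]

chosen = pick_backend(pool)
print(chosen.name)  # app2
```

Note that `app3` is idle but never chosen: skipping failed servers matters more than finding the least busy one.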

And finally, you'll notice I did not mention...

SSL Acceleration

This used to be really important in the 1990s, when servers were slow and application servers weren't able to handle lots of customers using HTTPS/SSL. The initial connection to a secure server (the SSL handshake) takes quite a bit of CPU power, so people would use a load balancer with a special hardware chip to accelerate it.

However, advances in CPU performance mean modern servers can now handle SSL comfortably in software, removing the need for specialized hardware acceleration cards.

If you look at the numbers for an average modern server using the newer EC (elliptic curve) certificates, it should be able to handle around 20,000 new SSL connections per second. And, given an average SSL session cache time of 5 minutes, that gives you 6 million concurrent users (20,000 * 60 * 5 = 6,000,000 per server).

Have you got that many customers per day?!
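The per-server arithmetic works out like this as a quick Python sanity check. It assumes a steady state in which every user's session is created by one full handshake and then reused for the whole 5-minute cache window, which is essentially Little's law (concurrency = arrival rate × time in system). The variable names are illustrative only.

```python
new_handshakes_per_second = 20_000  # full SSL handshakes per server (figure from the text)
session_cache_seconds = 5 * 60      # 5-minute session cache time

# Each handshake creates a session that stays reusable for the whole cache window,
# so the number of live sessions at steady state is rate x window (Little's law).
concurrent_sessions = new_handshakes_per_second * session_cache_seconds
print(f"{concurrent_sessions:,}")  # 6,000,000
```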

OK, so I may be slightly simplifying things.

Things like latency, application performance, server load, and monitoring can get really complex. But if you're interested, it's well worth diving into the technical weeds, so here are some useful sources for further information...

Tyler Treat has a detailed blog post on the 99th percentile myth: "Everything You Know About Latency Is Wrong". And here's evidence from our friends at HAProxy that a load balancer can keep up even at extreme load: "HAProxy forwards over 2 million HTTP requests per second on a single arm-based AWS Graviton2 instance."