Let me start by saying that there are a whole host of factors that will influence your choice. However, what I've tried to do here is pull together some key questions you'll need to think about, based on my personal experience, to help you articulate what you need.


Step One: What route are you going down?

Do you want hardware, virtual or cloud?

- Hardware - Dedicated resources mean that the applications you run on the appliance will not be contending for resources with other systems or services.

- Virtual - If you want virtual, then you need to consider what resources you can allocate. To avoid a noisy neighbor situation, you need to make sure your hypervisor has the virtual infrastructure (CPUs, RAM, etc.) to run the application. This can, however, be difficult to predict because of the number of variables, so with virtual you may not know where your limit is until you hit it.

- Cloud - If you want cloud, then you'll need to consider the specifics of running it on your chosen platform and how well that platform can integrate with your other systems.

Are you migrating from hardware to a virtual appliance?

As a rough guide, virtualization usually costs around 10% in performance. Aside from that, the specification for network interfaces, Central Processing Unit (CPU) and memory can be matched between the two. It’s worth noting that our Freedom License allows you to migrate across different platforms easily, quickly and without any financial penalties, and back again if you need to.
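To make the 10% rule of thumb concrete, here is a minimal sketch of the arithmetic. The function names are hypothetical, and the 10% figure is only the rough guide quoted above, so treat the results as a starting point for testing rather than a guarantee.

```python
def effective_virtual_capacity(hardware_capacity: float, overhead: float = 0.10) -> float:
    """Estimate what a like-for-like virtual appliance delivers.

    hardware_capacity can be in any unit (Gbps, TPS, connections);
    overhead is the assumed fractional loss from virtualization.
    """
    if not 0.0 <= overhead < 1.0:
        raise ValueError("overhead must be in the range [0, 1)")
    return hardware_capacity * (1.0 - overhead)


def capacity_to_match_hardware(hardware_capacity: float, overhead: float = 0.10) -> float:
    """Estimate the nominal capacity a virtual appliance needs so that,
    after the assumed overhead, it still matches the hardware figure."""
    if not 0.0 <= overhead < 1.0:
        raise ValueError("overhead must be in the range [0, 1)")
    return hardware_capacity / (1.0 - overhead)
```

For example, a hardware appliance handling 10 Gbps would be expected to deliver roughly 9 Gbps as an identically specified virtual appliance under this assumption.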

Step Two: What about your applications?

How many applications do you need to load balance?

The more applications a load balancer needs to support, the greater the resources required. This is particularly relevant if your users are likely to be using more than one of these applications as part of their work. The use of persistent connections will likely mean a higher connection count needs to be supported and, if Transport Layer Security (TLS) is used, a higher number of TLS Transactions Per Second (TPS) will likely require additional resources such as RAM and CPU. Having said that, although it can be tempting to load balance multiple applications on one big, powerful Application Delivery Controller (ADC), a per-app approach is usually recommended for flexibility and resilience. Dedicated solutions are also often easier to configure, manage and maintain, making them less likely to be compromised by human error.

What types of application are you working with?

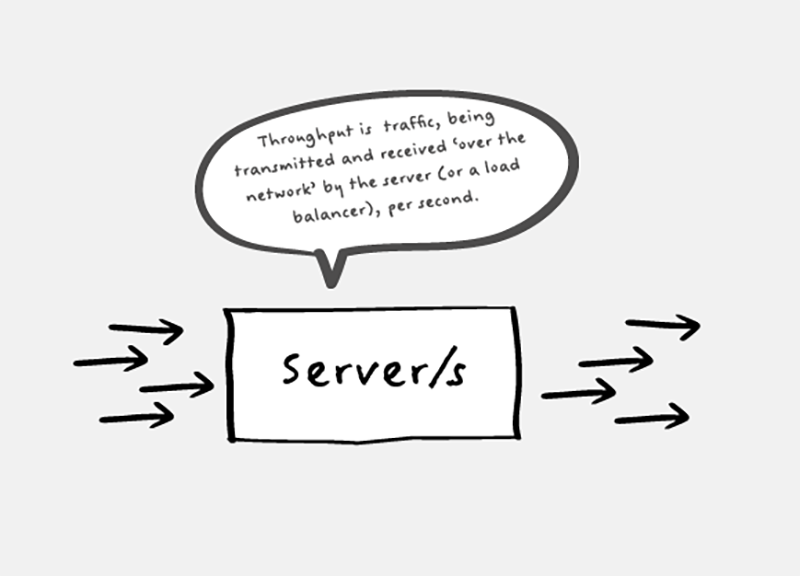

This is relevant because complex websites and storage services are very likely to require greater resources. A complex website will likely require more connections, session persistence records, HTTPS Requests Per Second (RPS), TLS Transactions Per Second (TPS) and throughput than a simple one. A storage service mainly needs higher throughput. Check out our comprehensive A-Z of applications and load balancing deployment guides to get a sense for your application's unique needs.

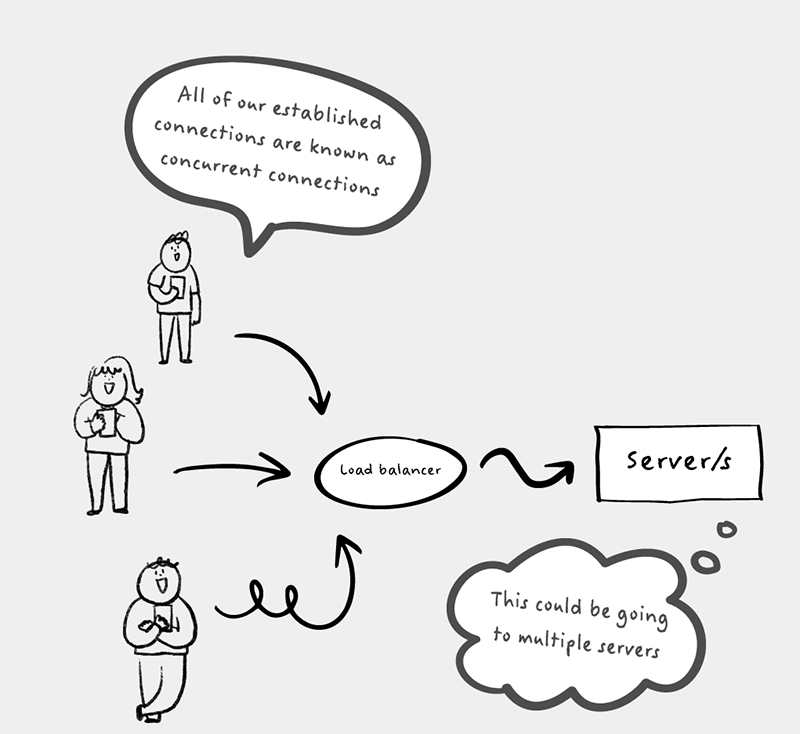

How many concurrent users does each application support?

The higher the number, the more significant the answer to the previous question becomes, particularly if Transport Layer Security (TLS) is being used. Remember also to take into account growth and peak estimates.

Step Three: What about throughput?

What growth do you expect?

Always factor in the likelihood that the number of users of an application will grow, and that their active use of an application may also increase. How you anticipate this growth will depend on many factors, such as customer growth, company and staff growth, sales and marketing activity and more.
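As a rough illustration of factoring in growth, the sketch below compounds an assumed annual growth rate over a planning horizon. The function name and the idea of a single fixed annual rate are simplifying assumptions; real growth is rarely this tidy.

```python
import math


def projected_users(current_users: int, annual_growth: float, years: int) -> int:
    """Project a user count forward, assuming a constant annual growth rate.

    annual_growth is a fraction (0.25 means 25% per year). The result is
    rounded up, because sizing should err on the side of more capacity.
    """
    if current_users < 0 or years < 0:
        raise ValueError("current_users and years must be non-negative")
    return math.ceil(current_users * (1.0 + annual_growth) ** years)
```

For instance, 800 users growing at 25% per year for two years gives 1,250 users to size for.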

What and when are your traffic peaks?

Don’t rely on averages for any throughput or connection metric: you need to support the inevitable peaks that occur over time, be they daily, weekly or something else. For example:

- Do a large number of users log in in the morning or after lunch?

- Are users likely to make greater use of the application as a deadline approaches? Perhaps completing a weekly time-sheet or tasks related to month-end or even a seasonal holiday?

- Could a marketing campaign, a new feature or a change in company policy result in a spike of users?
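To see why averages mislead, the following sketch compares the peak and the average of a set of monitoring samples. The sample values are made up for illustration, and the function name is hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def peak_vs_average(samples: Sequence[float]) -> Tuple[float, float, float]:
    """Return (peak, average, peak-to-average ratio) for a series of
    measurements such as hourly concurrent users or Mbps."""
    if not samples:
        raise ValueError("at least one sample is required")
    peak = max(samples)
    average = mean(samples)
    ratio = peak / average if average else float("inf")
    return peak, average, ratio


# Hypothetical hourly concurrent-user counts for part of a working day.
hourly_users = [100, 400, 300, 200]
peak, average, ratio = peak_vs_average(hourly_users)
```

With these made-up numbers the average is 250 users but the peak is 400, so sizing on the average would leave the load balancer short at the busiest hour.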

What is the throughput required?

In other words, how much traffic is going to be passing through the load balancer? This may require some analysis and monitoring to determine. If you are migrating from an existing load balancer, it probably won’t be too hard to find out the peak overall throughput it currently handles. If you are launching a new service, however, you’ll likely need to do some testing based on the anticipated peak number of concurrent users.
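For a new service, a first estimate can be sketched from the anticipated peak number of concurrent users before any testing is done. The per-user bandwidth figure and the headroom parameter below are assumptions you would need to replace with measurements from your own application.

```python
def estimate_peak_throughput_mbps(
    peak_concurrent_users: int,
    per_user_kbps: float,
    headroom: float = 0.0,
) -> float:
    """Estimate peak throughput in Mbps.

    per_user_kbps is an assumed average bandwidth per active user at peak;
    headroom is an optional safety margin as a fraction (0.25 adds 25%).
    """
    if peak_concurrent_users < 0 or per_user_kbps < 0 or headroom < 0:
        raise ValueError("inputs must be non-negative")
    base_mbps = peak_concurrent_users * per_user_kbps / 1000.0
    return base_mbps * (1.0 + headroom)
```

As an example, 2,000 concurrent users at an assumed 500 kbps each comes to 1,000 Mbps, or 1,250 Mbps with 25% headroom.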

Could you use Direct Server Return (DSR)?

Direct Server Return (DSR) is a method of load balancing where inbound requests are load balanced but return traffic is sent directly to the client, bypassing the load balancer. This greatly reduces the throughput demands on the load balancer and allows for a far greater number of clients to be supported. Consider using DSR where an application has high throughput demands and you do not need Layer 7 processing (such as TLS termination or cookie persistence) or Web Application Firewall (WAF) features.
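The effect of DSR on sizing can be sketched with simple arithmetic: with DSR, only the inbound request traffic counts towards the load balancer's throughput. The function name and the traffic split in the example are illustrative assumptions.

```python
def load_balancer_throughput_mbps(
    request_mbps: float, response_mbps: float, dsr: bool
) -> float:
    """Throughput the load balancer itself must carry.

    Without DSR, both requests and responses pass through it.
    With DSR, responses go straight from the server to the client,
    so only the inbound request traffic is counted.
    """
    if request_mbps < 0 or response_mbps < 0:
        raise ValueError("traffic figures must be non-negative")
    return request_mbps if dsr else request_mbps + response_mbps
```

For a download-heavy service with an assumed 50 Mbps of requests and 950 Mbps of responses, the load balancer would carry 1,000 Mbps without DSR but only 50 Mbps with it.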

What types of VIP?

Layer 4 VIPs (virtual IP addresses) consume far fewer resources than Layer 7 ones, so when sizing for them HTTPS RPS, TLS TPS and other Layer 7 factors can be ignored. However, connections and throughput cannot.
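As a small aide-memoire, the distinction above can be written down as a lookup of which metrics to size for each VIP type. The metric lists only include the ones named in this article and are not exhaustive.

```python
from typing import Tuple

# Metrics that matter for every VIP, per the paragraph above.
LAYER4_METRICS: Tuple[str, ...] = ("connections", "throughput")

# Layer 7 VIPs add the HTTP and TLS metrics on top of those.
LAYER7_METRICS: Tuple[str, ...] = LAYER4_METRICS + ("HTTPS RPS", "TLS TPS")


def metrics_to_size(vip_layer: int) -> Tuple[str, ...]:
    """Return the metrics to consider when sizing a VIP of the given layer."""
    if vip_layer == 4:
        return LAYER4_METRICS
    if vip_layer == 7:
        return LAYER7_METRICS
    raise ValueError("vip_layer must be 4 or 7")
```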

Step Four: What about security?

Are you using the Web Application Firewall?

A Web Application Firewall (WAF) adds a significant performance load that you should account for when considering CPU and memory requirements. How much will depend on your specific configuration.

Step Five: Putting it all together

Your requirements

Below is a template to record the requirements identified above, to facilitate conversations with colleagues and vendors.
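If you prefer to keep the answers in a structured form, one option is a small Python record like the sketch below. The class name, field names and checks are illustrative assumptions drawn from the questions in this guide, not a vendor format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoadBalancerRequirements:
    platform: str                  # "hardware", "virtual" or "cloud"
    application_count: int         # how many applications to load balance
    application_types: List[str]   # e.g. "complex website", "storage"
    peak_concurrent_users: int     # per application, at peak
    expected_annual_growth: float  # fraction, e.g. 0.2 for 20%
    peak_throughput_mbps: float    # measured or estimated peak
    uses_tls: bool
    vip_layer: int                 # 4 or 7
    direct_server_return: bool
    waf_enabled: bool
    clustered_pair: bool = True    # two units avoid a single point of failure

    def problems(self) -> List[str]:
        """Return a list of inconsistencies worth discussing."""
        found = []
        if self.platform not in ("hardware", "virtual", "cloud"):
            found.append("platform should be hardware, virtual or cloud")
        if self.vip_layer not in (4, 7):
            found.append("vip_layer should be 4 or 7")
        if self.direct_server_return and (self.vip_layer == 7 or self.waf_enabled):
            found.append("DSR does not suit Layer 7 processing or WAF features")
        return found
```

Filling one of these in per application gives you something concrete to share with colleagues and vendors, and the `problems` check flags combinations, such as DSR with a WAF, that the earlier sections advise against.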

Then, when you're ready, you should be able to select the relevant product from the vendor's product table much more easily.

And, finally...

It probably goes without saying, but if you want to avoid introducing a single point of failure (which was probably kinda the whole point!), don't forget you'll need TWO load balancers to create a clustered pair. So, once you've found the right product, remember to factor this in when you're crunching the numbers!