Have you ever wondered if LVS works in AWS? I can already say for certain that LVS-NAT works great - but what about LVS-DR and LVS-TUN?

Having spent the last several months playing in AWS, I'm writing this blog mostly to record my findings. I was trying to get LVS to work inside AWS in any mode other than LVS-NAT. NAT mode works brilliantly and provides a solution for most applications - you can relax source and destination checks on instances, making it easy to implement even across availability zones with few to no caveats.
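For reference, relaxing the source/destination check is a per-instance setting. Here is a minimal sketch in Python that only builds the AWS CLI command rather than running it. The helper name and the instance ID are made-up placeholders, not something from my lab.

```python
import shlex


def disable_src_dst_check_cmd(instance_id):
    """Build (but do not run) the AWS CLI call that turns off the
    source/destination check on a single EC2 instance."""
    return [
        "aws", "ec2", "modify-instance-attribute",
        "--instance-id", instance_id,
        "--no-source-dest-check",
    ]


# "i-0123456789abcdef0" is a hypothetical instance ID used for illustration.
print(shlex.join(disable_src_dst_check_cmd("i-0123456789abcdef0")))
```

You would run the printed command once for the director and once for each real server that needs the check relaxed.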

How LVS-DR and LVS-TUN work

Firstly, I feel I should explain exactly how LVS-DR and LVS-TUN actually work: they're very similar!

They're both forms of direct server return where the load balancer only has to deal with one half of the connection. Packets to the load balanced Virtual IP Address (VIP) arrive at the load balancer and then are load balanced to the chosen real server. However, packets from the real server back to the client bypass the load balancer, maximising egress throughput back to the client. How cool is that!

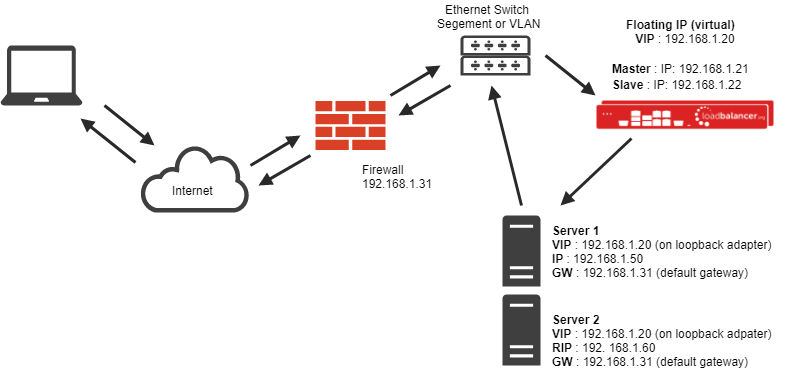

LVS-DR: This is the most common implementation, routing by MAC address at Layer 2. It's literally as shown above, with no router hops between the load balancer and the real servers. The destination IP remains the VIP, so the real server needs to be configured to receive traffic for the VIP address. This is usually achieved by using a loopback adapter with the VIP address configured - but there are also other methods such as iptables firewall rules.

LVS-TUN: This method fills the gap when a router hop sits between the load balancer and the real servers, which makes routing by MAC address useless. Instead, it crosses the Layer 3 boundary by encapsulating each packet in an IPIP tunnel on its way to the real server. The real server is configured with a TUN adapter set up with the VIP address, just like the loopback adapter method for LVS-DR!
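To make the difference between the two modes concrete, here is a rough sketch in Python that generates the commands you would typically run on the director and on each real server. It only builds strings and executes nothing. The IP addresses, the round-robin scheduler, and the ARP and rp_filter sysctl values are assumptions based on common LVS practice, not settings taken from my lab.

```python
# ipvsadm packet-forwarding flags: -g = direct routing, -i = IPIP tunnel,
# -m = masquerading (NAT).
FORWARDING_FLAGS = {"dr": "-g", "tun": "-i", "nat": "-m"}


def director_cmds(vip, port, real_servers, mode, scheduler="rr"):
    """Commands for the load balancer: one virtual service, N real servers."""
    flag = FORWARDING_FLAGS[mode]
    service = f"{vip}:{port}"
    cmds = [f"ipvsadm -A -t {service} -s {scheduler}"]
    for rip in real_servers:
        cmds.append(f"ipvsadm -a -t {service} -r {rip}:{port} {flag}")
    return cmds


def real_server_cmds(vip, mode):
    """Commands for a real server so that it accepts traffic for the VIP."""
    if mode == "dr":
        return [
            f"ip addr add {vip}/32 dev lo",
            # Assumed ARP settings so the real server does not answer ARP
            # requests for the VIP on behalf of the director.
            "sysctl -w net.ipv4.conf.all.arp_ignore=1",
            "sysctl -w net.ipv4.conf.all.arp_announce=2",
        ]
    if mode == "tun":
        return [
            "modprobe ipip",
            f"ip addr add {vip}/32 dev tunl0",
            "ip link set tunl0 up",
            # Assumed: reverse-path filtering relaxed for decapsulated traffic.
            "sysctl -w net.ipv4.conf.tunl0.rp_filter=0",
            "sysctl -w net.ipv4.conf.all.rp_filter=0",
        ]
    raise ValueError(f"no real server setup defined for mode {mode!r}")


# Hypothetical addresses: VIP 10.0.1.10, two real servers in the same subnet.
for cmd in director_cmds("10.0.1.10", 80, ["10.0.1.11", "10.0.1.12"], "dr"):
    print(cmd)
for cmd in real_server_cmds("10.0.1.10", "dr"):
    print(cmd)
```

The only difference on the director between the two modes is the forwarding flag. On the real server, the VIP moves from the loopback adapter to the tunnel interface.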

Note: We also have a blog on a third method by Yahoo, L3 DSR.

LVS-NAT didn't work for my project because I wanted to load balance transparent web filters in AWS.

Explicit web filters are easy, but a proxy server configured to work transparently acts as a router hop. It can still intercept and proxy/filter traffic, but that traffic is routed to the proxy server just as it would be to a default gateway. The packet's destination is the IP address of the remote host requested (such as www.example.com) and not the proxy server (as with explicit proxies), which makes things a lot harder. NAT mode rewrites the destination address, which breaks routing to the actual endpoint - so you need a way to load balance without affecting the destination address at all. I would normally use LVS-DR or LVS-TUN to solve this problem in a normal LAN environment, but since I'd heard that these methods don't always work as well in AWS, I decided I needed to check.
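The destination-address problem is easier to see with a toy model. The sketch below is plain Python, not real packet handling. The `Packet` class, the function names, and all addresses are invented for illustration. It shows that NAT overwrites the destination IP, while DR and TUN deliver the original packet with its destination intact.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_mac: str = ""
    inner: Optional["Packet"] = None  # set only for IPIP-encapsulated packets


def forward_nat(pkt, real_ip):
    """NAT mode: the destination IP is rewritten to the real server."""
    return replace(pkt, dst_ip=real_ip)


def forward_dr(pkt, real_mac):
    """DR mode: only the destination MAC changes; the IP header is untouched."""
    return replace(pkt, dst_mac=real_mac)


def forward_tun(pkt, director_ip, real_ip):
    """TUN mode: the original packet rides unmodified inside an outer packet."""
    return Packet(src_ip=director_ip, dst_ip=real_ip, inner=pkt)


# A client asking for a remote web server through a transparent filter.
request = Packet(src_ip="10.0.0.50", dst_ip="203.0.113.80")

print(forward_nat(request, "10.0.1.11").dst_ip)           # original destination lost
print(forward_dr(request, "02:00:00:00:00:11").dst_ip)    # original destination kept
print(forward_tun(request, "10.0.1.5", "10.0.2.11").inner.dst_ip)  # kept inside tunnel
```

A transparent filter needs to know where the client was really trying to go, which is why only the last two cases are usable for it.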

Let's look at some of the scenarios tested

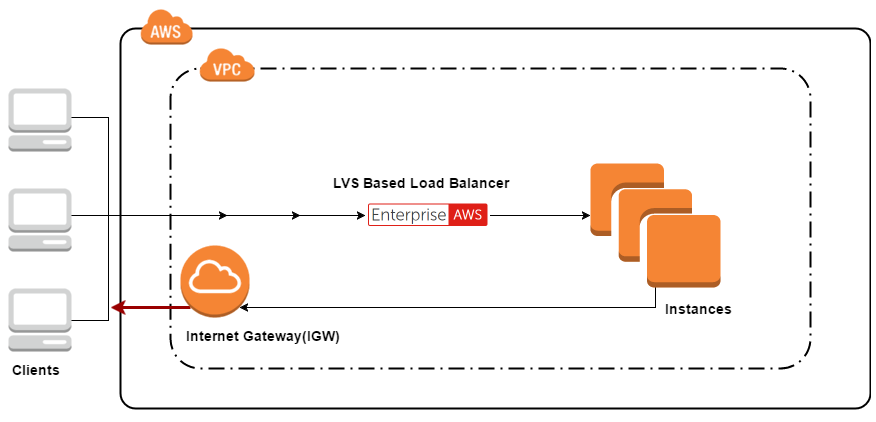

LVS-DR, LVS-TUN simple setup with external clients.

How cool would it be if you could do this?! But you can't.

For me this would have been the holy grail. If I could do this, LVS-DR/LVS-TUN could be used as freely as LVS-NAT mode to load balance almost anything that needs it. I found through experimentation that when I set a static route on the real server back to my client via the load balancer, it worked instantly! This proves to me that traffic was being dropped at the edge if it was not sourced from the correct instance.
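As a toy model of that inference (this is a guess at the behaviour from the outside, not documented AWS logic), the sketch below treats the edge as forwarding a packet only when its source IP belongs to the instance that sent it. The function names and addresses are hypothetical, and the second helper just builds the kind of static route I added on the real server.

```python
def edge_forwards(sender_ips, pkt_src_ip):
    """Assumed edge rule: pass the packet only if its source IP is one of
    the addresses owned by the instance that handed it to the edge."""
    return pkt_src_ip in sender_ips


def return_route_cmd(client_ip, director_ip):
    """Static route on the real server that sends replies for one client
    back through the load balancer instead of straight to the edge."""
    return f"ip route add {client_ip}/32 via {director_ip}"


VIP = "10.0.1.10"          # hypothetical: owned by the director instance
REAL_SERVER = "10.0.1.11"  # hypothetical real server address

# Direct server return: the reply leaves the real server with the VIP as source.
print(edge_forwards({REAL_SERVER}, VIP))

# With the static route, the reply leaves via the director, which owns the VIP.
print(edge_forwards({VIP}, VIP))
print(return_route_cmd("198.51.100.7", VIP))
```

Of course, routing replies back through the load balancer throws away the whole point of direct server return, so it is only useful as a diagnostic.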

So shall we give up right here? NO! Let's see if it works with any other topology.

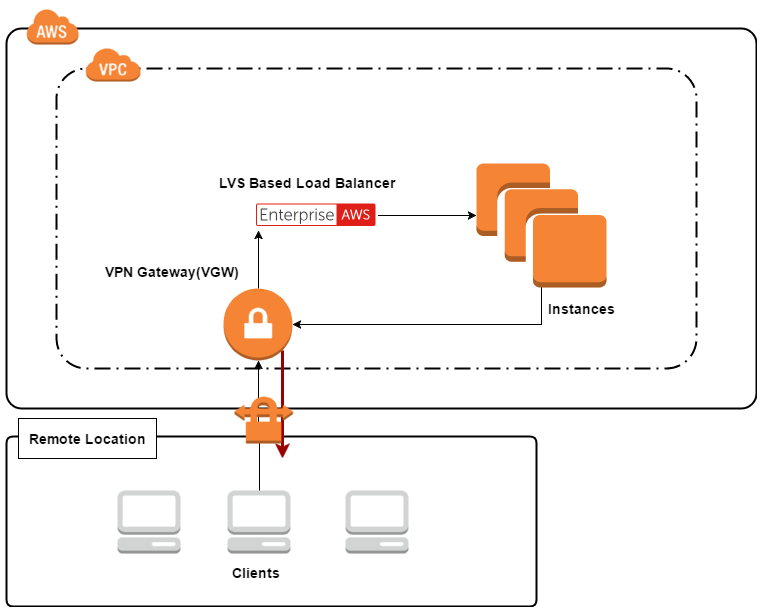

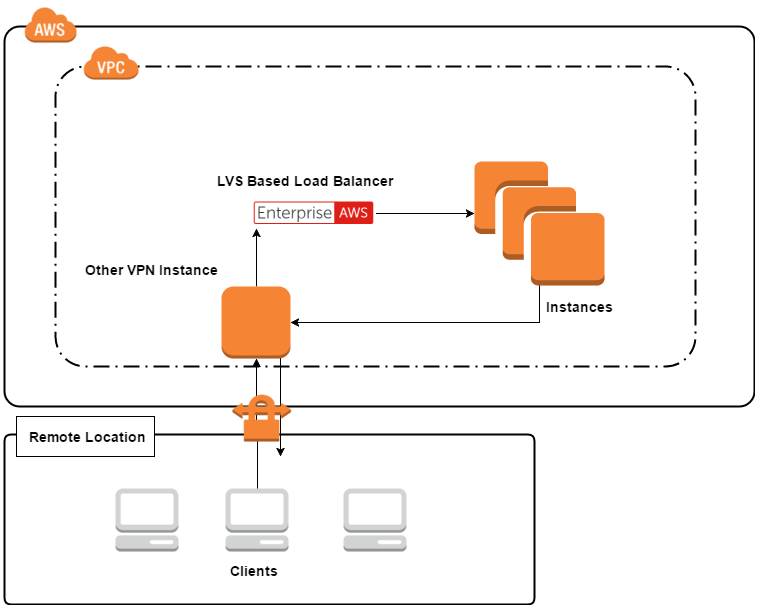

LVS-DR, LVS-TUN using Amazon VPN.

Neither of these worked either. So it seems the edge Virtual Private Gateway (VGW) is as strict as its big brother, the Internet Gateway (IGW).

Will anything actually work in AWS in any mode other than NAT?

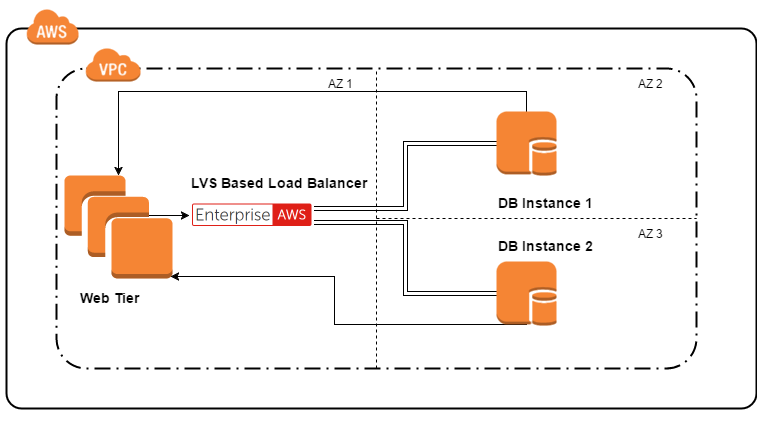

LVS-DR, LVS-TUN simple setup with internal clients.

BINGO!!!

Well, something works, and something is better than nothing! This can now be used for multi-tiered applications at least. The most obvious example would be web servers with a database backend. You could use LVS-NAT or maybe even a reverse proxy like HAProxy (giving you Layer 7 features) for the HTTP/HTTPS client-side frontend traffic - but use super-fast LVS-DR for the database cluster, relieving some load from the load balancer/director instance. Alternatively, use TUN mode so the database servers can additionally be in separate AZs. But now we're getting ahead of ourselves...

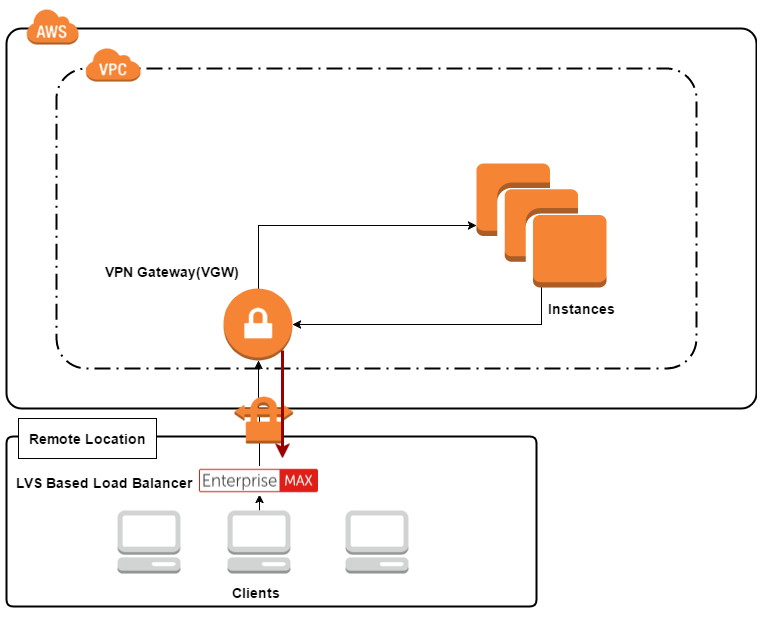

Okay, so this works too!

Using our own VPN instance we can successfully route traffic back to the client. I think this is actually pretty useful: you get the benefits of direct server return, and the only drawback is that you need to manage your own VPN instance(s).

I also tested this scenario with the load balancer in the remote site instead, but I had some issues. I was using pfSense for the remote VPN server, and it struggled with packets from locally connected subnets appearing on the wrong interface: it seemed to silently drop them. This should still be theoretically possible with another VPN server that has appropriately relaxed settings, but I decided that I didn't want to replace my pfSense box with a Linux router, on which I'd have known how to relax these settings. With pfSense it was as though the equivalent of the Linux rp_filter was turned up, so it wouldn't allow packets from local sources on the wrong interface. This will likely be true of other VPN servers/firewalls, so you may need to investigate how to relax such settings if you plan to try this route.
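For comparison, this is roughly what relaxing reverse-path filtering looks like on a Linux router. It is a sketch only: the Python below just builds the sysctl commands, and the interface names are hypothetical. I did not run this in the lab, since I stayed with pfSense.

```python
# Linux rp_filter values: 0 = no source validation, 1 = strict, 2 = loose.
RP_FILTER_MODES = {"off": 0, "strict": 1, "loose": 2}


def rp_filter_cmds(interfaces, mode="loose"):
    """Build sysctl commands that set rp_filter on 'all' and on each named
    interface. The kernel applies the higher of the 'all' value and the
    per-interface value, so both are set to the same number here."""
    value = RP_FILTER_MODES[mode]
    names = ["all", *interfaces]
    return [f"sysctl -w net.ipv4.conf.{name}.rp_filter={value}" for name in names]


# "eth0" and "tun0" are placeholder names for the LAN and VPN interfaces.
for cmd in rp_filter_cmds(["eth0", "tun0"], mode="loose"):
    print(cmd)
```

Loose mode accepts a packet as long as its source is reachable through any interface, which is the kind of relaxation this topology appears to need.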

LVS-TUN two-tiered application with a reverse proxy for the frontend across 3 (or more) AZs.

This is the other sort of configuration I think LVS-DR/LVS-TUN might prove useful for. You may want to host front-end applications in one AZ while backend applications are in another, as we mentioned above. It often makes sense to use such a configuration to take advantage of a fast L7-aware reverse proxy like HAProxy or Nginx for web traffic, while your database backend benefits from super-fast direct server return.

Conclusion...

My project was interesting in that I got to learn what works and what doesn't.

I found that Amazon's edge is very secure and will drop what it sees as martian or spoofed packets (packets whose source IP address is not that of the sending instance) at the Internet Gateway. I learned this also applies to Amazon's IPsec Virtual Private Gateway. However, I was able to get more mileage out of using a homebrew VPN solution running on an instance within the VPC itself. I tried both LVS-DR and LVS-TUN with not just proxy servers but web servers too, so it was a fairly broad lab.

Did I get anything wrong? If I did or if the situation changes in AWS, please update me in the comments section below.

Friendly request to Amazon (if they're reading this blog): Please consider giving us a way to allow martian or spoofed packets to pass your edge when coming from a specific allowed IP address. I see this as similar to disabling source and destination checks on an instance, but at the IP level. This would allow much more scope to use direct server return in AWS which would be really great!