Load balancing streaming media

Benefits of load balancing streaming media

Load balancing streaming media offers these benefits:

- Enhanced performance and quality: Load balancing improves the viewer's experience by distributing streaming requests across multiple servers, preventing any single server from becoming overwhelmed. By routing users to the least-congested and often geographically closest server (especially when integrated with a Content Delivery Network, or CDN), it reduces the time it takes for data to reach the viewer. This minimizes the dreaded buffering and gives a smoother, more responsive start to the stream. Because traffic is spread intelligently, server resources (CPU, memory, bandwidth) are used efficiently, which in turn helps maintain the delivery of high-quality video and audio streams.

- High Availability (HA): Load balancing keeps the streaming service operational and available, even when unexpected problems occur. Load balancers continuously monitor the health of all streaming servers. If a server fails, slows down, or becomes unresponsive, the load balancer automatically stops sending traffic to it and directs new connections, including viewers reconnecting from the failed server, to the remaining healthy servers. This prevents a single point of failure from causing a service outage. By having multiple redundant servers ready to take over, the system achieves fault tolerance, delivering higher uptime and a more reliable service for viewers.

- Seamless scalability: Streaming services often experience unpredictable and massive traffic spikes (for example, during a live event or a viral content release), so load balancing is essential for growth. The load balancer allows streaming providers to add or remove servers based on real-time demand. When traffic surges, new servers can be brought online and added to the load balancer's distribution pool, maintaining consistent service quality without any change for viewers. Servers can likewise be removed during off-peak hours, optimizing infrastructure usage and reducing operational costs.
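The least-congested routing, health monitoring and pool resizing described above can be sketched as a least-connections pool. This is a minimal illustration under stated assumptions, not how the Loadbalancer.org appliance is implemented: the `Server` and `LeastConnectionsPool` names are hypothetical, health status is set by hand rather than by real health checks, and geographic or CDN-based routing is ignored.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """One streaming server in the pool (hypothetical model)."""
    name: str
    active_connections: int = 0
    healthy: bool = True


class LeastConnectionsPool:
    """Least-connections selection over healthy servers; servers can join or leave."""

    def __init__(self) -> None:
        # Insertion-ordered, so ties go to the server that was added first.
        self.servers: dict[str, Server] = {}

    def add(self, name: str) -> None:
        # Scale out: a new server becomes eligible for the next request.
        self.servers[name] = Server(name)

    def remove(self, name: str) -> None:
        # Scale in: the server receives no further requests.
        self.servers.pop(name, None)

    def mark_health(self, name: str, healthy: bool) -> None:
        # Stand-in for the result of a periodic health check.
        self.servers[name].healthy = healthy

    def pick(self) -> str:
        # Consider only healthy servers and choose the least-loaded one.
        candidates = [s for s in self.servers.values() if s.healthy]
        if not candidates:
            raise RuntimeError("no healthy servers available")
        best = min(candidates, key=lambda s: s.active_connections)
        best.active_connections += 1
        return best.name

    def release(self, name: str) -> None:
        # Call when a viewer's stream ends.
        server = self.servers.get(name)
        if server is not None and server.active_connections > 0:
            server.active_connections -= 1


if __name__ == "__main__":
    pool = LeastConnectionsPool()
    pool.add("rs1")
    pool.add("rs2")
    print([pool.pick() for _ in range(4)])  # ['rs1', 'rs2', 'rs1', 'rs2']
    pool.mark_health("rs1", False)          # rs1 fails its health check
    print(pool.pick())                      # rs2
```

In this sketch a failed server simply stops being chosen for new requests; connections it already held are not moved, which mirrors how viewers of a failed server typically have to reconnect.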

This video demonstrates the positive impact a load balancer can have on the user's streaming experience:

About streaming media

Streaming media is video or audio content that is sent in compressed form over the Internet and played immediately, rather than being saved to the hard drive first. With streaming media, a user does not have to wait for a file to download before playing it. Because the media is sent as a continuous stream of data, it can play as it arrives. Users can pause, rewind or fast-forward, just as they could with a downloaded file, unless the content is being streamed live.
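The difference between downloading and streaming can be illustrated with a small sketch. The function names are hypothetical, and the chunks are plain `bytes` objects standing in for data arriving over the network; no real player or network I/O is involved.

```python
from typing import Iterable, Iterator


def download_then_play(chunks: Iterable[bytes]) -> list[str]:
    """Download model: playback starts only after every chunk has arrived."""
    events: list[str] = []
    received = bytearray()
    for chunk in chunks:
        received += chunk
        events.append(f"received {len(chunk)} bytes")
    events.append(f"play {len(received)} bytes")
    return events


def stream_and_play(chunks: Iterable[bytes]) -> Iterator[str]:
    """Streaming model: each chunk is played as soon as it arrives."""
    for chunk in chunks:
        yield f"received {len(chunk)} bytes"
        yield f"play {len(chunk)} bytes"


if __name__ == "__main__":
    data = [b"ab", b"cde"]
    print(download_then_play(data))
    print(list(stream_and_play(data)))
```

In the download model the first "play" event comes last; in the streaming model it follows the very first chunk.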

Why Loadbalancer.org for streaming media?

Loadbalancer’s intuitive Enterprise Application Delivery Controller (ADC) is designed to save time and money with a clever, not complex, WebUI.

Easily configure, deploy, manage, and maintain our Enterprise load balancer, reducing complexity and the risk of human error, for a difference you can see in just minutes.

And with a Web Application Firewall (WAF) and Global Server Load Balancing (GSLB) included straight out of the box, there are no hidden costs, so the prices you see on our website are fully transparent.


How to load balance streaming media

The load balancer can be deployed in 4 fundamental ways: Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 7 Reverse Proxy (Layer 7 SNAT mode).

For streaming media, Layer 4 DR mode is recommended.

About Layer 4 DR mode load balancing

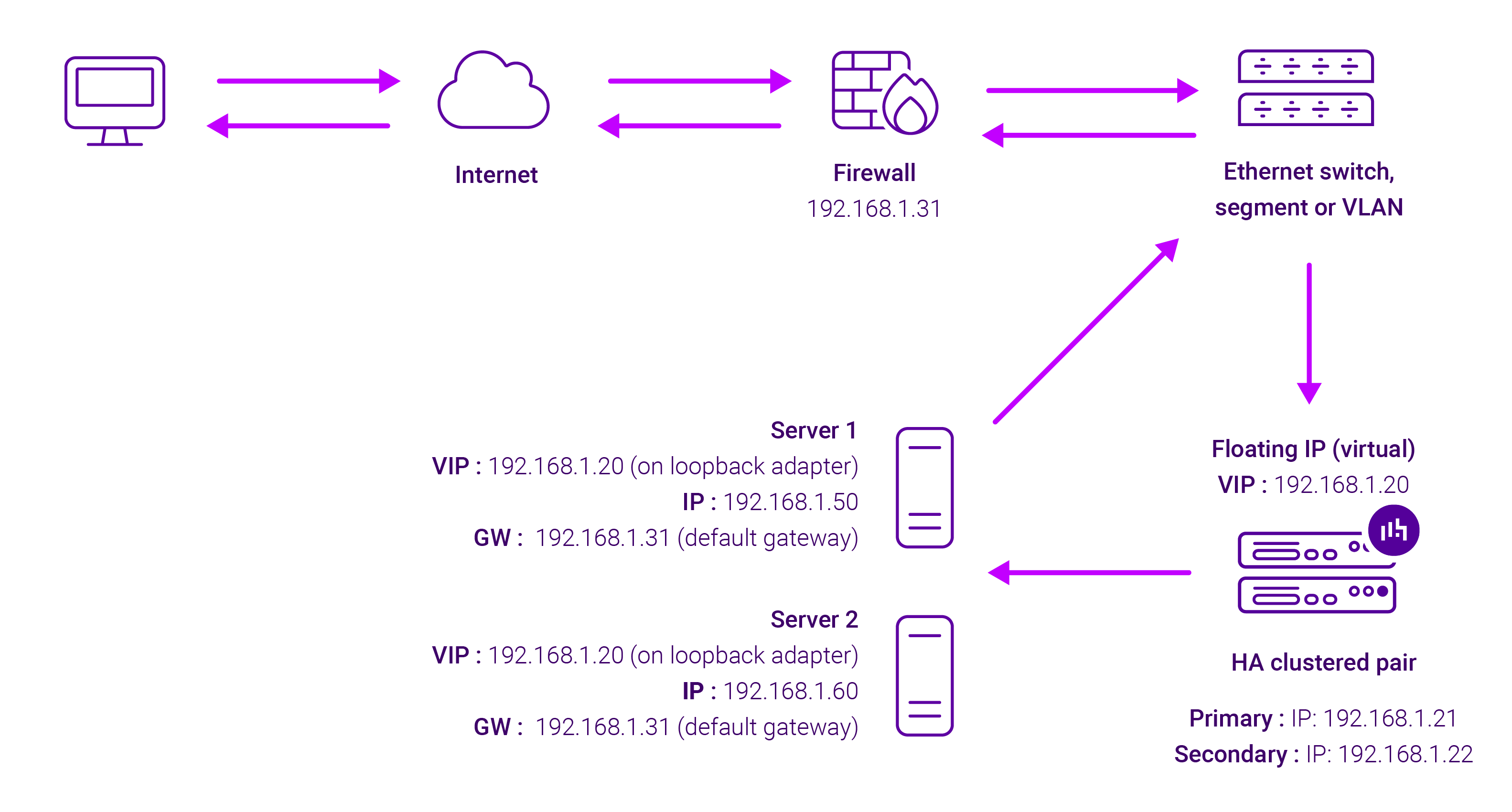

One-arm direct routing (DR) mode is a very high performance solution that requires little change to your existing infrastructure.

DR mode works by rewriting the destination MAC address of each incoming packet on the fly to match the selected Real Server, which is very fast.
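A minimal model of the DR-mode forwarding step might look like this. The `Frame` type and `dr_forward` function are hypothetical: `Frame` is a heavily simplified stand-in for an Ethernet frame carrying an IP/TCP packet (the source MAC, checksums and other fields are omitted), and all addresses are made-up examples.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    """Heavily simplified Ethernet frame carrying an IP/TCP packet (hypothetical)."""
    dst_mac: str   # Layer 2: which host on the segment receives the frame
    src_ip: str    # Layer 3: the client's address
    dst_ip: str    # Layer 3: the VIP
    dst_port: int  # Layer 4: the service port


def dr_forward(frame: Frame, real_server_mac: str) -> Frame:
    """Forward a frame DR-style: rewrite only the destination MAC address."""
    return replace(frame, dst_mac=real_server_mac)


if __name__ == "__main__":
    incoming = Frame(dst_mac="02:00:00:00:00:01",   # load balancer (made-up MAC)
                     src_ip="203.0.113.50",          # client (documentation range)
                     dst_ip="192.168.2.20",          # VIP
                     dst_port=80)
    outgoing = dr_forward(incoming, real_server_mac="02:00:00:00:00:0a")
    print(outgoing)
```

Because the destination IP is still the VIP after forwarding, the Real Server has to accept traffic addressed to the VIP; because the port and client address are untouched, no port translation or source NAT takes place.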

When the packet reaches the Real Server, the Real Server is expected to own the Virtual Service's IP address (VIP). This means that you need to ensure that the Real Server (and the load balanced application) responds to both the Real Server's own IP address and the VIP.

The Real Servers should not respond to ARP requests for the VIP. Only the load balancer should do this. Configuring the Real Servers in this way is referred to as Solving the ARP problem.
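To see why the ARP problem arises, and how one common Linux approach addresses it, the sketch below models which hosts would answer an ARP request for the VIP. It assumes Linux Real Servers with the VIP configured on the loopback interface, and models only values 0 and 1 of the kernel's `arp_ignore` sysctl; in practice this is usually paired with `arp_announce=2`. The `Host` class and `arp_responders` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Which addresses a host holds, and how it answers ARP (hypothetical model)."""
    name: str
    nic_ips: set[str]                                    # addresses on the LAN interface
    loopback_ips: set[str] = field(default_factory=set)  # addresses on lo
    arp_ignore: int = 0  # mirrors Linux net.ipv4.conf.*.arp_ignore (0 or 1 only)

    def answers_arp_for(self, ip: str) -> bool:
        if self.arp_ignore == 1:
            # Reply only for addresses on the interface the request arrived on,
            # i.e. the LAN interface in this model.
            return ip in self.nic_ips
        # Linux default (0): reply for any local address on any interface.
        return ip in self.nic_ips or ip in self.loopback_ips


def arp_responders(ip: str, hosts: list[Host]) -> list[str]:
    """Names of the hosts that would answer 'who has <ip>?' on the segment."""
    return [h.name for h in hosts if h.answers_arp_for(ip)]


if __name__ == "__main__":
    vip = "192.168.2.20"
    lb = Host("lb", {"192.168.2.21", vip})
    rs_default = Host("rs1", {"192.168.2.50"}, {vip})
    rs_solved = Host("rs1", {"192.168.2.50"}, {vip}, arp_ignore=1)
    print(arp_responders(vip, [lb, rs_default]))  # ['lb', 'rs1']: conflicting replies
    print(arp_responders(vip, [lb, rs_solved]))   # ['lb']: ARP problem solved
```

With the default behaviour both the load balancer and the Real Server claim the VIP, so clients may send traffic straight to a Real Server and bypass the load balancer. With `arp_ignore=1` the Real Server still owns the VIP (on loopback) but only the load balancer answers for it, while the Real Server keeps answering for its own address.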

On average, DR mode is 8 times quicker than NAT mode for HTTP, 50 times quicker for Terminal Services, and faster still for streaming media or FTP.

The load balancer must have an interface in the same subnet as the Real Servers to provide the Layer 2 connectivity that DR mode requires.

The VIP can be brought up on the same subnet as the Real Servers, or on a different subnet provided that the load balancer has an interface in that subnet.
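These subnet requirements can be checked up front with Python's standard `ipaddress` module. This sketch only compares addresses and prefixes: it assumes the load balancer's interface addresses are given in CIDR notation (e.g. `192.168.2.21/24`), and it cannot confirm that the hosts genuinely share a Layer 2 segment. The function names are hypothetical.

```python
import ipaddress


def has_interface_in_subnet(lb_interfaces: list[str], address: str) -> bool:
    """True if any load balancer interface (CIDR notation) is in address's subnet."""
    ip = ipaddress.ip_address(address)
    return any(ip in ipaddress.ip_interface(iface).network for iface in lb_interfaces)


def check_dr_layout(lb_interfaces: list[str], vip: str, real_servers: list[str]) -> list[str]:
    """Return the problems found in a proposed DR-mode addressing plan (empty if none)."""
    problems = []
    for rip in real_servers:
        if not has_interface_in_subnet(lb_interfaces, rip):
            problems.append(f"no load balancer interface in the subnet of Real Server {rip}")
    if not has_interface_in_subnet(lb_interfaces, vip):
        problems.append(f"no load balancer interface in the subnet of VIP {vip}")
    return problems


if __name__ == "__main__":
    # VIP on a different subnet from the Real Servers, with an interface in each.
    print(check_dr_layout(["192.168.2.21/24", "10.0.0.5/24"], "10.0.0.100",
                          ["192.168.2.50", "192.168.2.51"]))  # []
```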

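Port translation is not possible with DR mode; for example, VIP:80 → RIP:8080 is not supported. DR mode is also transparent, meaning the Real Server sees the client's source IP address. Both properties follow from the fact that only the destination MAC address is rewritten: the IP and TCP headers reach the Real Server unchanged.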