Rate limiting any kind of application is deceptively simple, and yet incredibly dangerous.

You can easily lock users out of a system by using Quality of Service (QoS) rules that are too tight. And you don't want to accidentally launch a Denial of Service (DoS) attack on your own customers!

So what's the solution? Here we explain how to strike the right balance, using Hitachi Vantara as an example.

Why do MSPs need to rate limit Hitachi Vantara object storage anyway?

When you're providing object storage as a shared service, rate limits help ensure that no single user or application can overwhelm the system.

Object storage is, by nature, incredibly scalable and unlikely to break. However, as an MSP you will incur dramatically different costs for servicing different users if you don't apply some kind of rate limit or quota system.

What kind of things should we rate limit?

The biggest impact on an object storage system comes from writing files. That's why load balancers are often configured to ensure that buckets or files are always read from and written to the same part of the cluster. Otherwise the cluster can waste a lot of resources moving files around to rebalance, and keeping copies across regions for high availability.
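To illustrate what "always the same part of the cluster" means, here is a minimal Python sketch of rendezvous (highest-random-weight) hashing. This is not how any particular load balancer implements it, and HAProxy's `hash-type consistent` uses a different consistent-hashing scheme. The `pick_node` function and the node names are hypothetical. The point is only that every request for a given bucket deterministically picks the same node.

```python
import hashlib


def pick_node(bucket: str, nodes: list[str]) -> str:
    """Map a bucket to one node using rendezvous (highest-random-weight) hashing."""

    def score(node: str) -> int:
        # Hash the (node, bucket) pair; the same pair always gets the same score.
        digest = hashlib.sha256(f"{node}|{bucket}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The node with the highest score "owns" the bucket.
    return max(nodes, key=score)


nodes = ["node-a", "node-b", "node-c"]  # hypothetical cluster members
print(pick_node("mybucket", nodes))  # same answer on every call
```

Removing a node only moves the buckets that were on that node. Adding a node only moves the buckets for which the new node now scores highest. This is why keyed routing like this avoids mass rebalancing.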

We probably also want to implement generic DoS protection rules against API endpoints, or limit individual customers at different rates. But I've covered generic DoS rules for HAProxy before, so I won't repeat myself here.

So instead I'm going to focus this blog post on the slightly trickier problem of rate limiting individual object storage buckets...

Why is rate limiting object storage buckets tricky?

One of the main reasons that rate limiting buckets is a bit tricky is that they can be referenced in two completely different ways.

The S3 object storage model is very flexible, so it is quite happy for you to reference a file in either of two ways:

- The host method (what AWS calls virtual-hosted style), e.g. https://mybucket.mysite.com/myfile

- The URL method (path style), e.g. https://mysite.com/mybucket/myfile
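Telling the two styles apart comes down to checking whether the Host header is a subdomain of your S3 domain. Here is a minimal Python sketch of that decision. It assumes `mysite.com` is the base domain (swap in your own). It also assumes that, for the URL method, a bucket only counts when a file name follows it. The `bucket_from_url` function is hypothetical: it models the logic rather than anything HAProxy itself runs.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

BASE_DOMAIN = "mysite.com"  # assumption: replace with your own S3 endpoint domain


def bucket_from_url(url: str) -> Optional[Tuple[str, str]]:
    """Return (style, bucket) for an S3 request URL, or None if no bucket is named."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # .hostname drops any :port

    # Host method: mybucket.mysite.com/myfile -> bucket is the first DNS label.
    if host.endswith("." + BASE_DOMAIN):
        return ("host", host.split(".", 1)[0])

    # URL method: mysite.com/mybucket/myfile -> path splits to ["", "mybucket", "myfile"].
    segments = parts.path.split("/")
    if len(segments) > 2 and segments[1] and segments[2]:
        return ("url", segments[1].lower())

    return None  # e.g. a bare "mysite.com/" or "mysite.com/mybucket" request


print(bucket_from_url("https://mybucket.mysite.com/myfile"))  # ('host', 'mybucket')
print(bucket_from_url("https://mysite.com/mybucket/myfile"))  # ('url', 'mybucket')
```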

Now wouldn't it be great if you could have the same rate limit on both methods...?

Well the good news is that, yes, you can! It's just a little bit fiddly. But don't worry, I'll walk you through it.

The trick is to work out which method is being used and extract the bucket name accordingly. You then track requests in a stick table, using a key that combines not only the bucket name but also the source IP of the client.
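Here is a minimal Python sketch of that key, using a hypothetical `limit_key` helper. One thing worth knowing: the HAProxy config in this post joins the bucket name and the IP with nothing in between. As a result, two different (bucket, client) pairs can produce the same key. Under the standard S3 bucket naming rules, names only contain lowercase letters, digits, dots and hyphens. Putting a `_` between the two parts therefore keeps keys unambiguous. In HAProxy that would mean `concat(_,req.srcip)` instead of `concat(,req.srcip)`; this is a suggestion, not part of the original config. The stick table output shown later was captured without a separator.

```python
def limit_key(bucket: str, src_ip: str, sep: str = "_") -> str:
    """Build one stick-table key per (bucket, client IP) pair.

    Standard S3 bucket names only use lowercase letters, digits, '.' and '-',
    so a '_' separator cannot be confused with part of the bucket name.
    """
    return f"{bucket.lower()}{sep}{src_ip}"


# Host style (test.mysite.com/...) and URL style (mysite.com/test/...) both
# yield bucket "test", so the same client lands on the same key either way.
print(limit_key("test", "192.168.1.123"))  # test_192.168.1.123

# With no separator (as with concat(,req.srcip)), different pairs can collide:
print(limit_key("test1", "92.168.1.123", sep=""))  # test192.168.1.123
print(limit_key("test", "192.168.1.123", sep=""))  # test192.168.1.123
```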

How to rate limit object storage buckets with HAProxy

Here is the complete HAProxy configuration for rate limiting S3 buckets:

```
# Rate limit S3 buckets by source IP with host or URL access style
# (these http-request rules go in the frontend that receives your S3 traffic)

# First get the source IP address
http-request set-var(req.srcip) src

# Get the host-based bucket name as a string (assuming bucket.mysite.com/file)
http-request set-var(req.buckethost) req.hdr(host),field(1,'.'),lower

# Get the URL path-based bucket name as a string (assuming mysite.com/bucket/file)
http-request set-var(req.bucketurl) path,field(2,'/'),lower

# Combine each with the source IP address to make a key that matches both the IP & bucket
http-request set-var(req.buckethost.ip) var(req.buckethost),concat(,req.srcip)
http-request set-var(req.bucketurl.ip) var(req.bucketurl),concat(,req.srcip)

# Only stick on the host bucket + IP when the Host header (minus any :port)
# really is a bucket subdomain. Replace .mysite.com with your own domain.
# Why? Because otherwise it would match the URL style:
# i.e. mysite.com/bucket/file would get matched as if mysite was the bucket!
http-request track-sc0 var(req.buckethost.ip) table Abuse if { req.hdr(host),field(1,':'),lower -m end .mysite.com }

# Only stick on the URL bucket if you can see a filename...
# Why? Because otherwise it would match the host style:
# i.e. bucket.mysite.com/file would get matched as if file was the bucket!
# (Only the first track-sc0 that matches applies, so the host rule wins if both match.)
http-request set-var(req.path_is_file) path,field(3,'/')
http-request track-sc0 var(req.bucketurl.ip) table Abuse if { var(req.path_is_file) -m len ge 1 }

# Check the Abuse table, and return a 429 "slow down" error
acl abuse sc0_http_req_rate(Abuse) ge 2
http-request deny deny_status 429 if abuse

# The value of 2 is just for testing. You would obviously use a much higher
# threshold than 2 requests every 10 seconds!

# CREATE A BACKEND TO HOLD THE ABUSE TABLE
backend Abuse
    stick-table type string len 64 size 100k expire 3m store http_req_cnt,http_req_rate(10s)
```
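If it helps to see what the Abuse table and the `sc0_http_req_rate(Abuse) ge 2` check are doing, here is a simplified Python model using a hypothetical `AbuseTable` class. It keeps a sliding 10-second window per key and returns 429 once the count in the window reaches the threshold. It is only an approximation. HAProxy's `http_req_rate` is a frequency counter rather than a log of individual requests, and this model ignores the table's `expire` and `size` limits.

```python
from collections import defaultdict, deque


class AbuseTable:
    """Simplified model of a stick table storing http_req_rate(10s),
    checked with `sc0_http_req_rate(Abuse) ge <threshold>`."""

    def __init__(self, period: float = 10.0, threshold: int = 2) -> None:
        self.period = period            # window in seconds (the 10s in http_req_rate(10s))
        self.threshold = threshold      # deny once the rate reaches this value
        self.hits = defaultdict(deque)  # key -> timestamps of recent requests

    def request(self, key: str, now: float) -> int:
        """Count one request for `key` at time `now` (non-decreasing) and return the HTTP status."""
        window = self.hits[key]
        window.append(now)  # tracking counts the request before the deny check
        while window and window[0] <= now - self.period:
            window.popleft()  # forget requests that fell out of the window
        return 429 if len(window) >= self.threshold else 200


table = AbuseTable(period=10.0, threshold=2)
print(table.request("test192.168.1.123", now=0.0))   # 200
print(table.request("test192.168.1.123", now=1.0))   # 429
print(table.request("test192.168.1.123", now=20.0))  # 200: the window has moved on
```

The tracking rules run before the deny rule, so denied requests are still counted. A client that keeps retrying therefore stays blocked until it backs off. The model behaves the same way because `request` records the hit before checking the threshold.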

Test the URL bucket method with:

```
http://192.168.1.74/test/test2
```

Then check that the stick table shows the expected values:

```
echo "show table Abuse" | socat unix-connect:/var/run/haproxy.stat stdio
# table: Abuse, type: string, size:51200, used:1
0xe290e0: key=test192.168.1.123 use=0 exp=1709199 http_req_cnt=1 http_req_rate(10000)=0
```

To test the hostname method, I edited my hosts file to map 192.168.1.74 to test.mysite.com and then used:

https://test.mysite.com/test2Then re-check the stick table:

echo "show table Abuse" | socat unix-connect:/var/run/haproxy.stat stdio

# table: Abuse, type: string, size:51200, used:1

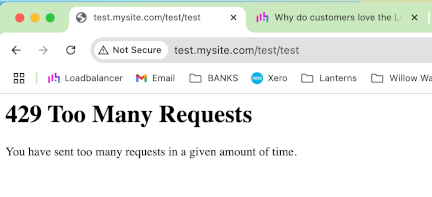

0xe290e0: key=test192.168.1.123 use=0 exp=1709199 http_req_cnt=2 http_req_rate(10000)=0And if I hit the page several times, I then get blocked for abuse:

Which is exactly what we're after. Result!

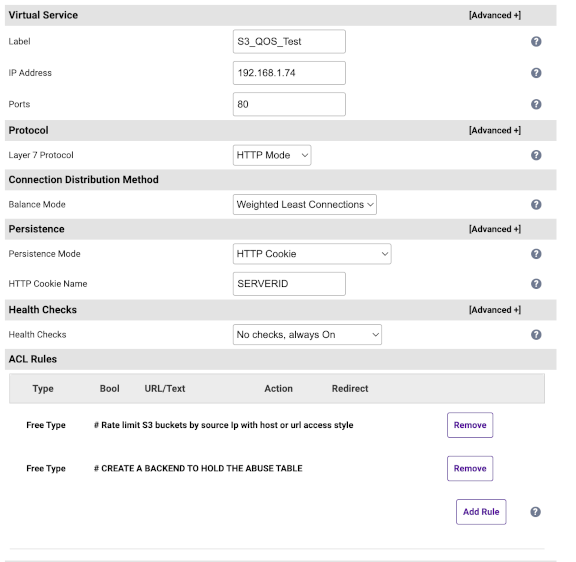

Here at Loadbalancer, we love to remove complexity

This kind of thing is, by nature, slightly complex. But as long as you think things through logically, it's actually quite simple and reliable to implement.

And, if you want to make things even easier, you can simply use the Free Type ACLs on our Enterprise ADC to implement these complex rules at the touch of a button.

But always remember: don't DoS your own application!

If you're at all unsure about any of the above, we strongly recommend that you talk to us about your exact requirements before putting something like rate limiting into production. We have conversations like this with customers all the time, and often uncover an unexpected outcome or edge case that has caused a problem.

Either way, if you're an MSP you need to make sure that all your customers are treated fairly, and therefore implementing Quality of Service rules is a must. So, if you want to get it right the first time, why not talk to the experts?