It sounds like a very simple question, and yet it causes a lot of confusion. In this blog, I’ll dig into the question a bit further and I’ll also try to highlight facts and evidence rather than opinion. Although no doubt I’ll fail, as I’m just as biased as every other engineer I’ve ever met…

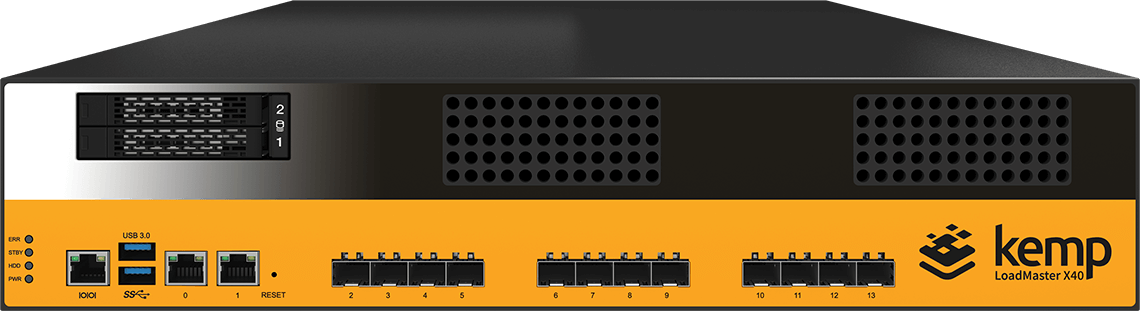

The Kemp LM-X40 is a great piece of kit with 40 Gbps throughput and 12 x 10Gb SFP+ ports.

Let’s deal with the simple questions first:

Do I need one network port per application?

No. You can have as many applications as you like on one physical network port.

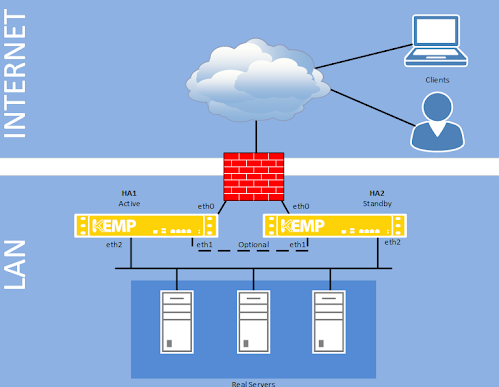

But, surely a two-arm load balancer configuration needs two physical ports?

No. You can have as many subnets and VLANs as you like on one physical port. It's a common misconception because most engineers show two ports in use to make the logical network more obviously relatable to a physical one (I’m definitely guilty of that).
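
As a rough illustration of the one-port, many-VLANs idea, here is a minimal Python sketch that prints the Linux `iproute2` commands for creating tagged 802.1Q sub-interfaces on a single physical port. The interface name `eth0`, the VLAN IDs, and the addresses are all made up for the example. It also assumes a Linux-based appliance and a switch port that is trunking those VLANs, so treat it as a sketch rather than how any particular product does it.

```python
# Hypothetical two-arm layout (VIP side + real-server side) on ONE physical port.
# Interface name, VLAN IDs and addresses are assumptions for illustration only.
from ipaddress import ip_interface


def vlan_commands(parent, vlans):
    """Return iproute2 commands that create one 802.1Q sub-interface per VLAN."""
    commands = []
    for vlan_id, cidr in sorted(vlans.items()):
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"invalid VLAN ID: {vlan_id}")
        address = ip_interface(cidr)  # raises ValueError on a malformed address
        name = f"{parent}.{vlan_id}"
        commands.append(f"ip link add link {parent} name {name} type vlan id {vlan_id}")
        commands.append(f"ip addr add {address} dev {name}")
        commands.append(f"ip link set dev {name} up")
    return commands


two_arm = {10: "192.168.10.5/24", 20: "10.0.20.5/24"}
for command in vlan_commands("eth0", two_arm):
    print(command)
```

Both "arms" end up as logical interfaces (`eth0.10` and `eth0.20` here) sharing the same cable, which is all a two-arm configuration really needs.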

But, surely I need physical separation of networks for security reasons?

No. It's not 1992! Given that most applications are now hosted on hypervisors with software-defined networks, I can't believe people still ask this question. Do you seriously think AWS has physical network separation?

The only real reason for separate network ports is physical redundancy, because the other primary reason (security segregation) can easily be implemented using VLANs. Logical network segregation with VLANs is really useful, but don’t get me started on why I hate the entire concept of DMZs…

What about a management port though? That's more secure, right?

No. Do you think Amazon uses physical management ports in its software-defined network? People make a lot of noise about physical security, which may have some benefit in a desktop environment where many people have physical access to your network ports. Heck, you can even force all your office networks into the public cloud and require everyone to use VPNs, or faff around with 802.1X... But seriously, folks, we’re talking about the data center here. If someone has physical access and you don't trust them, then you're in serious trouble!

But, my consultant/vendor/manager/friend told me that I need two ports?

They are wrong.

So before I move on to the more important questions, it's probably a good idea to acknowledge that preference, consistency, and tradition play an important part in the common request for more ports. And I definitely agree that in very simple environments with one or two applications, it can be nice for your physical configuration to look a bit like your logical configuration:

It makes it nice and simple to label the network cables with things like: "My critical app - don't touch!". You often see this kind of configuration in smaller businesses with fewer than 20 servers.

However, in the real world, the Kemp LM-X40 is an expensive piece of enterprise kit:

If you have forked out $60K+ for a pair of these load balancers, you are probably using them for more than a simple test lab on your desk. It's almost certain that you will be plugging them into a nicely managed server rack deep in your enterprise data center. The main purpose of a load balancer is high availability, so you will almost certainly be plugging them into a highly available dual switch fabric.

So, let’s quickly move on to some more complex scenarios...

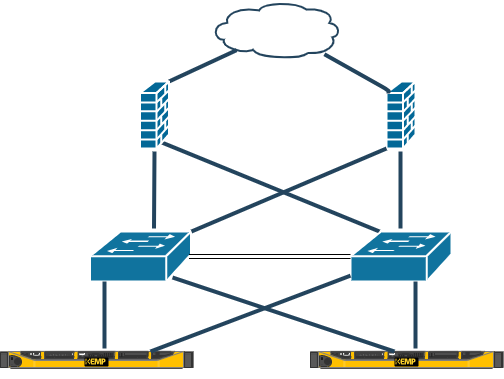

What if I have a redundant dual switch fabric, do I need two network ports?

Yes! Finally, a good reason to have multiple ports because, guess what, we need TWO physical ports if we have TWO switch fabrics.

But what if I have two redundant switch fabrics, do I need four network ports?

Well yes but... No! Who on earth has two separate redundant switch fabrics?

What you have is someone getting confused between physical network diagrams and logical network diagrams, which is both understandable and very common, but sad. Remember, if in doubt, the diagram is probably logical rather than physical these days.

So, getting back to the point... What could I do with my 12 physical 10G ports?

Let’s have a look at a completely fabricated example...

Object Storage App:

- 4 x 10G NICs - 802.3ad bonded across two switches

Exchange Cluster:

- 2 x 10G NICs - 802.3ad bonded across two switches

Remote Desktop Services Cluster:

- 2 x 10G NICs - 802.3ad bonded across two switches

Isolated Development Cluster:

- 2 x 10G NICs - 802.3ad bonded across two switches

High Performance App2:

- 4 x 10G NICs - 802.3ad bonded across two switches

Oops, I need 2 more ports for that! And, wait a minute, I hit my 40G bandwidth capacity with just the object storage app!
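
The arithmetic behind that punchline is easy to check. Here is a quick Python sketch that tallies the fabricated example against the LM-X40 figures quoted earlier (12 x 10G ports, 40 Gbps throughput). The variable and function names are mine, purely for illustration.

```python
# Figures come from the fabricated example and the LM-X40 description above.
PORT_SPEED_GBPS = 10
AVAILABLE_PORTS = 12
MAX_THROUGHPUT_GBPS = 40

# Application -> number of dedicated 10G ports requested
apps = {
    "Object Storage App": 4,
    "Exchange Cluster": 2,
    "Remote Desktop Services Cluster": 2,
    "Isolated Development Cluster": 2,
    "High Performance App2": 4,
}


def tally(apps):
    """Return (ports needed, nominal bandwidth in Gbps) for dedicated ports."""
    ports_needed = sum(apps.values())
    return ports_needed, ports_needed * PORT_SPEED_GBPS


ports_needed, nominal_gbps = tally(apps)
shortfall = max(0, ports_needed - AVAILABLE_PORTS)

print(f"Ports needed: {ports_needed} (available: {AVAILABLE_PORTS}, short by {shortfall})")
print(f"Nominal bandwidth: {nominal_gbps} Gbps (appliance limit: {MAX_THROUGHPUT_GBPS} Gbps)")
for name, ports in apps.items():
    if ports * PORT_SPEED_GBPS >= MAX_THROUGHPUT_GBPS:
        print(f"{name} alone can saturate the appliance")
```

Dedicated ports add up to 14 against the 12 available, and the 140 Gbps of nominal port bandwidth is three and a half times what the appliance can actually push.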

OK, I’m being a bit daft here, but do you see my point?

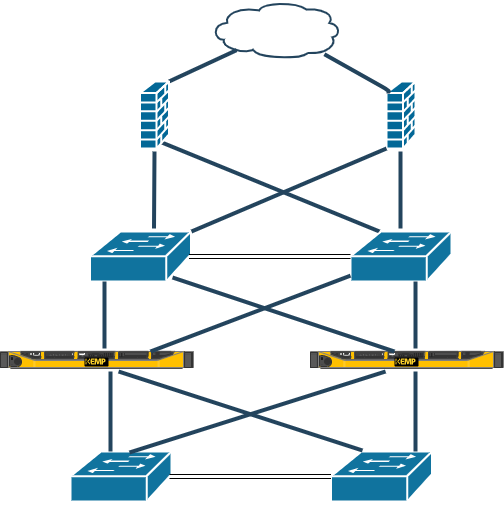

Any attempt to use physical separation of networks in an enterprise load balancing environment quickly runs into trouble.

It would be so much easier if you just bonded a pair of 40G NICs over two switch fabrics and used as many clusters as you like — until you’ve used 100% of the load balancer's physical bandwidth capacity.

But then we come to the real problem... What are you plugging the load balancer into?

Sounds like a simple question, doesn’t it? But have you currently got a 40G QSFP+ switch fabric or a 10/25G SFP28 switch fabric? And if so, which Kemp model do you choose?

- The Kemp XHC-40G with 4x 40G QSFP+ ports?

OR

- The Kemp XHC-25G with 4x 25G SFP28 ports?

After all, the sockets are a completely different size!

And what if you change your mind?

Wait a minute… Maybe that's why they give you lots of 10G ports? Just in case you've got the wrong uplink connector size and have turned your load balancer into a very expensive doorstop?

Why fewer is better when it comes to network ports:

For the ultimate flexibility and performance, you should have FEWER network ports on your load balancer, NOT MORE.

- Because, as I’ve already discussed, the maximum number of ports that you need is almost certainly 2.

- It’s way less confusing to figure out the physical wiring.

- Your kernel, PCI bus, network card offloading, and switch fabric all operate at peak performance.

- You don’t waste bonding capacity on your switch fabric, trying to bond 8x 10G instead of 2x 100G.

- And you can always simply change the network card if you need to move between 40G QSFP+ <--> 25G SFP28.
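
One way to read the bonding point in the list above: link aggregation normally keeps each flow on a single member link so that packets stay in order, which means one big flow can never go faster than one member. The Python sketch below is a toy model of that behaviour, not how any particular switch or driver works. The CRC32 hash is only a stand-in for a real transmit hash policy, and the 30 Gbps flow is hypothetical.

```python
import zlib


def pick_link(flow, link_count):
    """Pin a flow to one bond member. CRC32 is a stand-in for a real hash policy."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % link_count


def deliverable_gbps(flows, link_count, link_speed_gbps):
    """Total traffic that fits once every flow is pinned to a single member link."""
    loads = [0.0] * link_count
    for flow, demand_gbps in flows.items():
        loads[pick_link(flow, link_count)] += demand_gbps
    # Each member link can carry at most its own line rate.
    return sum(min(load, link_speed_gbps) for load in loads)


# One hypothetical flow: (src IP, dst IP, src port, dst port) -> demand in Gbps
big_flow = {("10.0.0.1", "10.0.0.2", 40000, 443): 30.0}

print(deliverable_gbps(big_flow, link_count=8, link_speed_gbps=10))
print(deliverable_gbps(big_flow, link_count=2, link_speed_gbps=100))
```

In this model the single flow tops out at 10 Gbps on the 8x 10G bond, however the hash falls, but gets its full 30 Gbps on the 2x 100G bond.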

And that's why our top-end load balancer comes with only 2x 100G QSFP28 network ports:

Although, as you can see below, we also offer 4x 25G SFP28 as an option (or 4x 40G / 2x 50G). And I’m conveniently ignoring the onboard RJ-45 ports.

So technically I’m lying — But I’m trying to make a point!

No doubt, many people will think I’m wrong, and I’d love to hear why.

So please tell me how wrong I am in the comments section. I genuinely want to know. Because, if anyone has a convincing argument I’ll be straight down to the product development department demanding a product with more network ports!