Load balancing Red Hat OpenShift

Benefits of load balancing Red Hat OpenShift

Load balancing is essential to a well-functioning Red Hat OpenShift cluster, offering three key benefits:

- Improved performance and scalability: Load balancing evenly distributes incoming traffic across multiple application instances (known as pods). This prevents any single pod from becoming overwhelmed, allowing your application to handle a high volume of user requests. It also makes your application more scalable; as you add more pods to the cluster, the load balancer automatically includes them in the traffic distribution, helping your services stay responsive even during traffic spikes.

- High availability and resilience: Load balancers continuously monitor the health of the pods they are directing traffic to. If a pod goes offline or becomes unresponsive, the load balancer stops sending traffic to it and reroutes user requests to the healthy pods. This provides high availability and automatically isolates failed instances, creating a self-healing system that remains available and resilient.

- Easy external access: Load balancing in OpenShift simplifies how external clients access applications. It provides a single point of entry, like a virtual IP address or hostname, so clients don’t need to know the individual addresses of every pod. Instead, the load balancer intelligently routes requests to the correct pods, making your network architecture easier to manage.
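The first two benefits can be sketched in a few lines of Python. This is only an illustration of round-robin distribution with unhealthy targets skipped, not how OpenShift or the appliance implements it; the class name `RoundRobinBalancer` and the pod names are hypothetical.

```python
class RoundRobinBalancer:
    """Rotate across backends, skipping any that are marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # running counter used to pick the next backend

    def add(self, backend):
        """Register a new backend; it joins the rotation immediately."""
        if backend not in self.backends:
            self.backends.append(backend)
        self.healthy.add(backend)

    def mark_down(self, backend):
        """Record a failed health check so the backend receives no traffic."""
        self.healthy.discard(backend)

    def mark_up(self, backend):
        """Record a passed health check for a known backend."""
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        """Return the next healthy backend in rotation."""
        for _ in range(len(self.backends)):
            backend = self.backends[self._next % len(self.backends)]
            self._next += 1
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")
```

Calling `mark_down("pod-b")` removes that pod from the rotation until `mark_up("pod-b")` is called, and `add("pod-d")` brings a new pod into the rotation without any change on the client side.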

About Red Hat OpenShift

Red Hat OpenShift is a family of containerization software products that includes the popular OpenShift Container Platform, a hybrid cloud platform as a service built around Linux containers orchestrated and managed by Kubernetes on a foundation of Red Hat Enterprise Linux.

The product family also includes OpenShift Virtualization, a scalable, enterprise-grade platform for running and managing virtual machines alongside or instead of container workloads.

Red Hat OpenShift is a container orchestration tool built on top of Kubernetes and should not be confused with Docker. More on OpenShift vs Docker.

Why Loadbalancer.org for Red Hat OpenShift?

Loadbalancer’s intuitive Enterprise Application Delivery Controller (ADC) is designed to save time and money with a clever, not complex, WebUI.

Easily configure, deploy, manage, and maintain our Enterprise load balancer, reducing complexity and the risk of human error. For a difference you can see in just minutes.

And with WAF and GSLB included straight out of the box, there are no hidden costs, so the prices you see on our website are fully transparent.

More on what’s possible with Loadbalancer.org.

How to load balance Red Hat OpenShift

The load balancer can be deployed in four fundamental ways: Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 7 Reverse Proxy (Layer 7 SNAT mode).

For Red Hat OpenShift, Layer 7 Reverse Proxy is recommended.

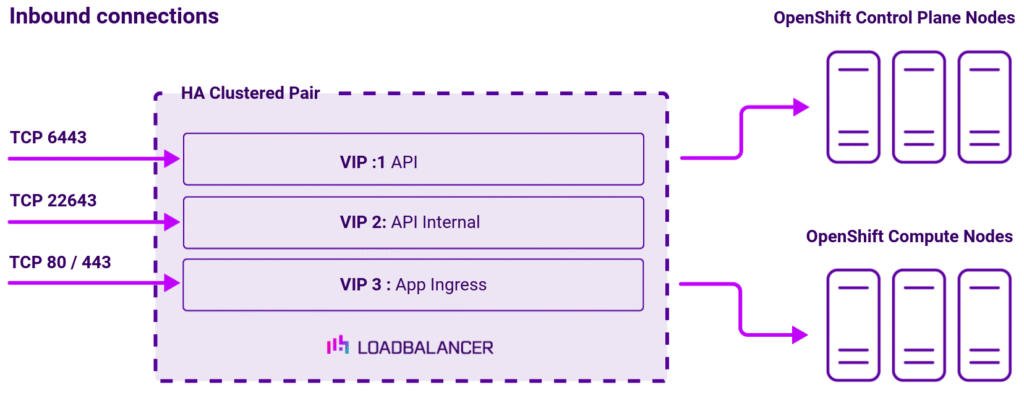

Virtual service (VIP) requirements

To provide load balancing and HA for Red Hat OpenShift, the following VIPs are required:

| Ref | VIP name | Mode | Port(s) | Health check |
|---|---|---|---|---|
| VIP 1 | API | Layer 7 Reverse Proxy (TCP) | 6443 | HTTP (GET) |
| VIP 2 | API Internal | Layer 7 Reverse Proxy (TCP) | 22623 | HTTP (GET) |
| VIP 3 | App Ingress | Layer 7 Reverse Proxy (TCP) | 80/443 | HTTP (GET) |
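Once the VIPs exist, a quick TCP reachability probe can confirm that each port is accepting connections. The sketch below uses only the Python standard library; the function names are illustrative, and a successful TCP connect says nothing about whether the service behind the VIP is healthy at the application level.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def probe_vip(host, ports, timeout=2.0):
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_is_open(host, port, timeout) for port in ports}
```

For example, `probe_vip("192.0.2.10", [6443, 80, 443])` would check the API and App Ingress ports listed in the table, where `192.0.2.10` is a placeholder address standing in for your own VIP. The API Internal port from the table can be added to the list in the same way.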

Load balancing deployment concept

Once the load balancer is deployed, clients connect to the Virtual Services (VIPs) rather than connecting directly to one of the Red Hat OpenShift servers. These connections are then load balanced across the Red Hat OpenShift servers to distribute the load according to the load balancing algorithm selected.

Note: The load balancer can be deployed as a single unit, although Loadbalancer.org recommends a clustered pair for resilience and high availability.

Load balancing topology

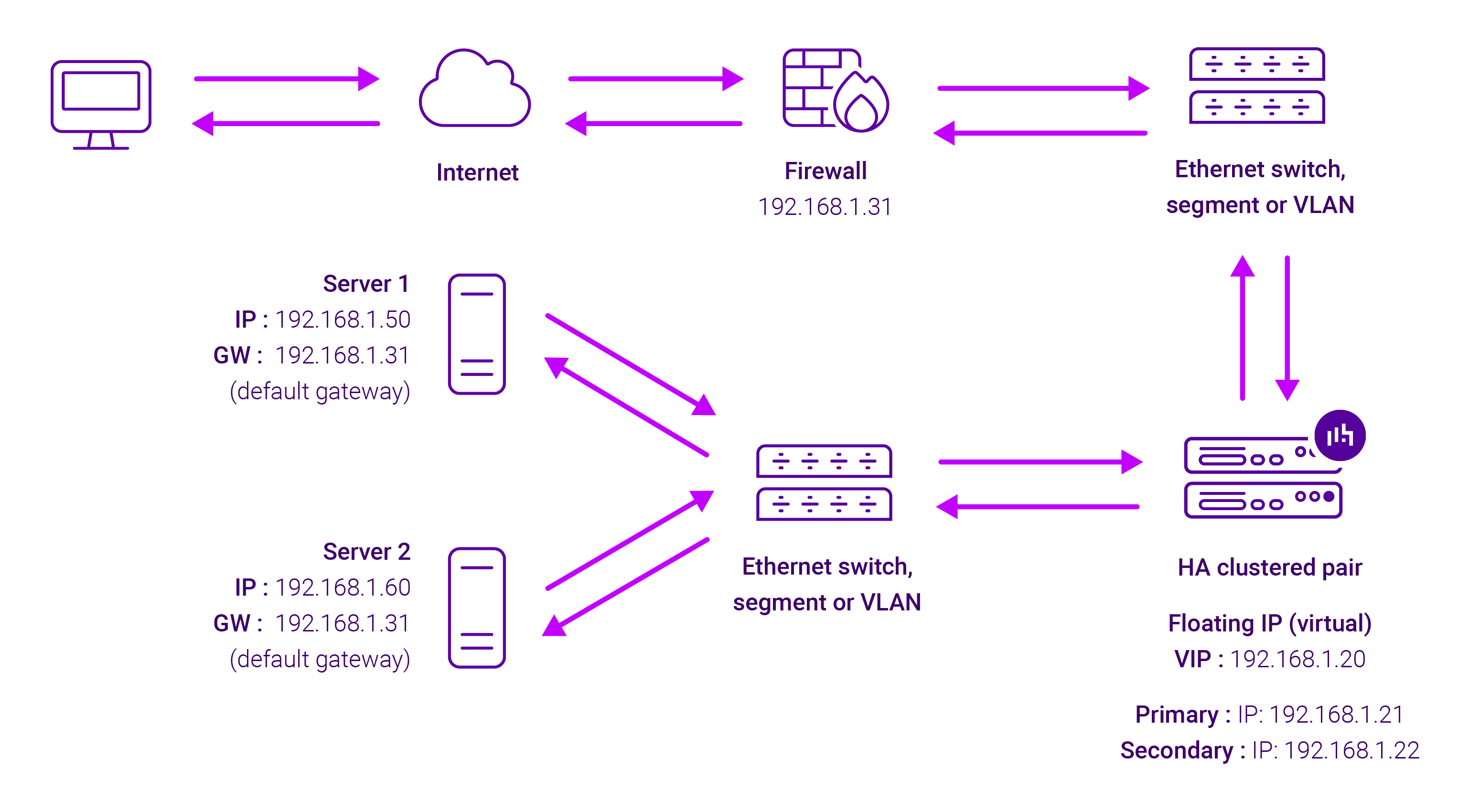

Layer 7 Reverse Proxy can be deployed using either a one-arm or two-arm configuration. For two-arm deployments, eth1 is typically used for client-side connections and eth0 is used for Real Server connections, although this is not mandatory since any interface can be used for any purpose.

For more on one-arm and two-arm topologies, see Topologies & Load Balancing Methods.

About Layer 7 Reverse Proxy load balancing

Layer 7 Reverse Proxy uses a proxy (HAProxy) at the application layer. Inbound requests are terminated on the load balancer and HAProxy generates a new corresponding request to the chosen Real Server. As a result, Layer 7 is typically not as fast as the Layer 4 methods. Layer 7 is typically chosen either when enhanced options such as SSL termination, cookie-based persistence, URL rewriting, or header insertion/deletion are required, or when the network topology prohibits the use of the Layer 4 methods.

The image below shows an example Layer 7 Reverse Proxy network diagram:

Because Layer 7 Reverse Proxy is a full proxy, Real Servers in the cluster can be on any accessible network including across the Internet or WAN.

Layer 7 Reverse Proxy is not transparent by default, i.e. the Real Servers will not see the source IP address of the client. Instead, they will see the load balancer’s own IP address by default, or any other local appliance IP address if preferred (e.g. the VIP address).

This can be configured per Layer 7 VIP. If required, the load balancer can be configured to provide the actual client IP address to the Real Servers in two ways: either by inserting a header that contains the client’s source IP address, or by modifying the Source Address field of the IP packets and replacing the IP address of the load balancer with the IP address of the client. For more information on these methods, please refer to Transparency at Layer 7 in the Enterprise Admin Manual.
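On the Real Server side, the header-insertion method means the application has to read the client address from that header rather than from the connection itself. The sketch below shows one way an application might do this, assuming the inserted header is `X-Forwarded-For` (a common convention, but an assumption here) and that the request is proxied in HTTP mode so headers are available. The function name `client_ip` and the `trusted_proxies` parameter are hypothetical.

```python
import ipaddress


def client_ip(peer_ip, headers, trusted_proxies):
    """Return the best-guess client IP for a request.

    peer_ip:         source address of the TCP connection (the proxy, if proxied)
    headers:         dict of request headers with lower-case names
    trusted_proxies: iterable of IPs allowed to supply the forwarded header
    """
    forwarded = headers.get("x-forwarded-for", "")
    # Only honour the header when the connection really came from our proxy.
    if peer_ip not in set(trusted_proxies) or not forwarded:
        return peer_ip
    # Assumption: the trusted proxy appends its entry last, so take the
    # rightmost value; earlier entries may have been supplied by the client.
    candidate = forwarded.split(",")[-1].strip()
    try:
        ipaddress.ip_address(candidate)
    except ValueError:
        return peer_ip  # malformed header: fall back to the connection address
    return candidate
```

Checking the connection address against a list of trusted proxies matters because any client can send this header itself. Without the check, the reported address could be spoofed.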