Benefits of load balancing Scality RING

Enterprises that use the AWS S3 protocol for cloud-native access to on-premises archive data on Scality RING achieve more consistent, balanced performance with a load-balanced workflow.

For Scality RING deployments, our load balancer provides:

- A highly available storage endpoint that distributes clients across backend nodes and takes nodes that fail health checks out of service: This minimizes downtime, improves resilience and simplifies maintenance.

- SSL passthrough (so object operations stay encrypted end to end): This ensures data protection for public connections.

- Global Server Load Balancing (GSLB) for multi-site deployments: This improves resilience, optimizes network performance and ensures high availability for distributed environments.

- Log export and performance analytics: Prometheus integration provides more in-depth metrics and monitoring.
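The GSLB bullet can be made more concrete with a small example. The Python sketch below shows the kind of decision a GSLB-style DNS responder makes: it prefers a healthy site that is local to the client and otherwise fails over to any healthy site. It is an illustration only. The `Site` class, the `resolve` function, the VIP addresses and the subnet-to-site mapping are all hypothetical, and none of them represent Loadbalancer.org's GSLB implementation or configuration format.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Site:
    """One data center in a hypothetical multi-site RING deployment."""
    name: str
    vip: str                      # hypothetical S3 VIP address at this site
    healthy: bool = True          # result of the site's latest health check
    subnets: List[str] = field(default_factory=list)  # client subnets treated as local


def resolve(client_ip: str, sites: List[Site]) -> Optional[str]:
    """Return the VIP a GSLB-style DNS answer might point this client to.

    1. Prefer a healthy site whose subnets contain the client address.
    2. Otherwise fail over to the first healthy site in the list.
    3. Return None if no site is healthy.
    """
    addr = ipaddress.ip_address(client_ip)
    healthy = [s for s in sites if s.healthy]
    for site in healthy:
        if any(addr in ipaddress.ip_network(net) for net in site.subnets):
            return site.vip
    return healthy[0].vip if healthy else None


sites = [
    Site("site-a", "192.0.2.10", subnets=["10.1.0.0/16"]),
    Site("site-b", "198.51.100.10", subnets=["10.2.0.0/16"]),
]
print(resolve("10.2.5.9", sites))   # site-b is local and healthy
sites[1].healthy = False
print(resolve("10.2.5.9", sites))   # site-b is down, so fail over to site-a
```

A production GSLB also has to handle DNS TTLs and weighting. The sketch deliberately leaves these out so that it shows only the health-aware site selection.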

Placing a load balancer in front of Scality RING enables High Availability (HA) clustering. The load balancer runs health checks against each node, so traffic is routed only to healthy nodes. Without a load balancer, an offline or failed node would continue to receive traffic, and client requests sent to it would fail.
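The health-check behavior described above can be modeled in a few lines. The Python sketch below rotates requests across backend nodes in round-robin order and skips any node that failed its most recent check. It is a simplified model rather than how the appliance is implemented. The class name and node names are hypothetical, and the real probe (for example, an HTTP request to each S3 node) is represented by a function that the caller supplies.

```python
import itertools
from typing import Callable, Dict, List, Optional


class HealthCheckedPool:
    """Round-robin backend selection that skips nodes failing their health check."""

    def __init__(self, nodes: List[str], check: Callable[[str], bool]):
        self.nodes = list(nodes)
        self.check = check  # caller-supplied probe, e.g. an HTTP request to the node
        self.status: Dict[str, bool] = {n: True for n in self.nodes}
        self._cycle = itertools.cycle(self.nodes)

    def run_health_checks(self) -> None:
        """Probe every node and record whether it is currently in service."""
        for node in self.nodes:
            self.status[node] = self.check(node)

    def next_node(self) -> Optional[str]:
        """Return the next healthy node in rotation, or None if every node is down."""
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if self.status[node]:
                return node
        return None


failed = {"s3-node-2"}  # pretend this node is offline
pool = HealthCheckedPool(["s3-node-1", "s3-node-2", "s3-node-3"],
                         check=lambda node: node not in failed)
pool.run_health_checks()
print([pool.next_node() for _ in range(4)])  # s3-node-2 never appears
```

When the failed node passes its next check, it rejoins the rotation automatically. This is the "taking nodes out of service" behavior, seen from the client's side.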

About Scality RING

Scality RING software deploys on industry-standard x86 servers to store objects and files whilst providing compatibility with the Amazon S3 API.

Scality recommends deploying RING with Loadbalancer.org on standard hardware for a pre-validated integration, a step-by-step RING deployment guide and mutual product support.

You can also purchase the integrated Loadbalancer.org and Scality solution through HPE to streamline acquisition. Small, medium and large SKUs are available, depending on your throughput requirements (see the product datasheet).

Through HPE we also offer virtual appliances which can be hosted on the customer’s own hypervisor, with the following minimum configuration:

- 2 vCPUs

- 4GB RAM

- 20GB storage

Why Loadbalancer.org for Scality RING?

Our long-standing partnership with Scality means this deployment guide isn’t theoretical. It has been field tested and rigorously validated by Scality experts, so you can be confident that the solution described is robust, reliable and backed by Scality's own real-world operational experience.

There are many different load balancers on the market, with different features, levels of support and associated costs, so why does Scality recommend us?

- Partnerships – We have a proven track record with Scality, HPE and their partner network

- Experience – We have 20+ years’ experience optimizing complex object storage environments

- Intuitive WebUI – Our easy-to-use interface means you can deploy faster and manage your load balancer more easily

- High availability – Our Enterprise ADC provides S3 connector failover, storage server failover, advanced health checks, and multi-site failover

- Performance – Our load balancer balances data load and offers Direct Server Return, as well as SSL offloading

- Scalability – We offer a number of different load balancer scaling and performance options for increased throughput, as well as a Site License that allows you to spin up as many load balancers as you like, at no extra cost

- Integrated GSLB – GSLB comes as standard with our appliance, straight out of the box, at no extra cost

- Validation – Our solution and step-by-step deployment guide come pre-validated by Scality

- Tierless Support – Consultative, same-day, tireless support comes as standard

- No End-of-Life – Loadbalancer.org does not End-of-Life its virtual appliances, putting customers back in control of their product lifecycle

For more on the Scality and Loadbalancer partnership, check out these resources:

Loadbalancer optimizes S3 networks for HPE with Scality

Scality, HPE and Loadbalancer take the object storage market by storm

How to load balance Scality RING

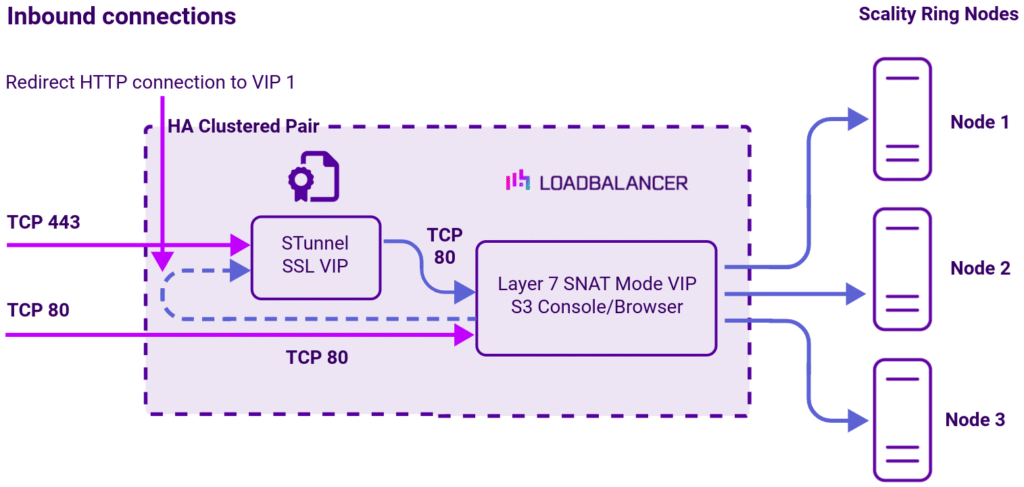

The load balancer can be deployed in four fundamental ways: Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 7 Reverse Proxy (Layer 7 SNAT mode).

Scality RING currently supports several load balancing methods, including Layer 4, Layer 7 and GSLB. The right choice depends on the use case.

Virtual service (VIP) requirements

To provide load balancing for Scality RING, the following VIP is required:

- S3: handles requests from S3 client applications via HTTP and HTTPS

In addition, the following optional backend-only VIPs may be required:

- S3_Browser: handles browser requests from S3 client applications via HTTP and HTTPS

- S3_Console: handles console requests from S3 client applications via HTTP and HTTPS

These VIPs are required if browser and console traffic must be routed separately. If configured, ACL rules must also be added to the S3 Virtual Service to direct the traffic to the correct backend VIP.
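The ACL-based routing described above can be sketched in Python. Each rule pairs a match condition on the incoming request with a target backend, and requests that match no rule stay on the S3 virtual service. The guide does not state which request attribute distinguishes browser and console traffic. The Host-header prefixes used here (`browser.`, `console.`) are therefore purely hypothetical, as are the `ACLS` and `route` names. Take the real match criteria from the Scality RING deployment guide.

```python
from typing import Callable, Dict, List, Tuple

# An ACL pairs a predicate over (lower-cased) request headers with a backend VIP name.
Acl = Tuple[Callable[[Dict[str, str]], bool], str]

# Hypothetical match rules: the real criteria come from the deployment guide.
ACLS: List[Acl] = [
    (lambda h: h.get("host", "").lower().startswith("browser."), "S3_Browser"),
    (lambda h: h.get("host", "").lower().startswith("console."), "S3_Console"),
]


def route(headers: Dict[str, str], default: str = "S3") -> str:
    """Return the backend for a request: the first matching ACL wins, else the S3 service."""
    # HTTP header names are case-insensitive, so normalise them before matching.
    normalised = {k.lower(): v for k, v in headers.items()}
    for matches, backend in ACLS:
        if matches(normalised):
            return backend
    return default


print(route({"Host": "console.example.com"}))  # routed to S3_Console
print(route({"Host": "s3.example.com"}))       # no ACL matches, stays on S3
```

Rules are evaluated in order and the first match wins. This keeps the routing predictable when more than one condition could apply.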

Load balancing deployment concept

To achieve the best level of performance and throughput when load balancing a Scality RING deployment, the Loadbalancer.org appliance should be configured to actively use multiple CPU cores for the load balancing process. This must be considered when initially deploying and sizing virtual appliances. A virtual host should be allocated a minimum of 4 vCPUs.
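This sizing guidance, together with the minimum virtual appliance configuration listed earlier, can be expressed as a simple pre-deployment check. The Python sketch below is illustrative only. `VmSpec` and `sizing_warnings` are hypothetical names, and the sketch applies the figures stated in this guide: 2 vCPUs, 4GB RAM and 20GB storage as the general minimum, with 4 vCPUs for Scality RING.

```python
from dataclasses import dataclass
from typing import List

# Minimum virtual appliance configuration quoted in this guide.
MIN_VCPUS, MIN_RAM_GB, MIN_DISK_GB = 2, 4, 20
# vCPU allocation this guide recommends for a Scality RING deployment.
RING_VCPUS = 4


@dataclass
class VmSpec:
    vcpus: int
    ram_gb: int
    disk_gb: int


def sizing_warnings(spec: VmSpec) -> List[str]:
    """Return a list of sizing problems for a proposed appliance VM (empty = OK)."""
    warnings = []
    if spec.vcpus < MIN_VCPUS:
        warnings.append(f"{spec.vcpus} vCPUs is below the {MIN_VCPUS} vCPU minimum")
    elif spec.vcpus < RING_VCPUS:
        warnings.append(f"{spec.vcpus} vCPUs meets the minimum but is below the "
                        f"{RING_VCPUS} vCPUs recommended for Scality RING")
    if spec.ram_gb < MIN_RAM_GB:
        warnings.append(f"{spec.ram_gb} GB RAM is below the {MIN_RAM_GB} GB minimum")
    if spec.disk_gb < MIN_DISK_GB:
        warnings.append(f"{spec.disk_gb} GB storage is below the {MIN_DISK_GB} GB minimum")
    return warnings


print(sizing_warnings(VmSpec(vcpus=2, ram_gb=4, disk_gb=20)))  # one vCPU warning
print(sizing_warnings(VmSpec(vcpus=4, ram_gb=4, disk_gb=20)))  # no warnings
```

A 2 vCPU appliance satisfies the general minimum. The check still flags it, because a Scality RING deployment should be sized for multi-core load balancing from the start.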

The RING service that should be load balanced is the S3 component.

Full, step-by-step instructions on how to do this can be found in this deployment guide: Load balancing Scality RING.

For advice on scaling Scality RING deployments for increased throughput, refer to this technical guide:

Load balancing options for scalable high-performance computing, storage, and AI workloads.