Some time ago, we blogged about why we still love DSR. It’s a load balancing method which goes by many names: direct server return (DSR), direct routing (DR), LVS/DR, and nPath routing, to name a few.

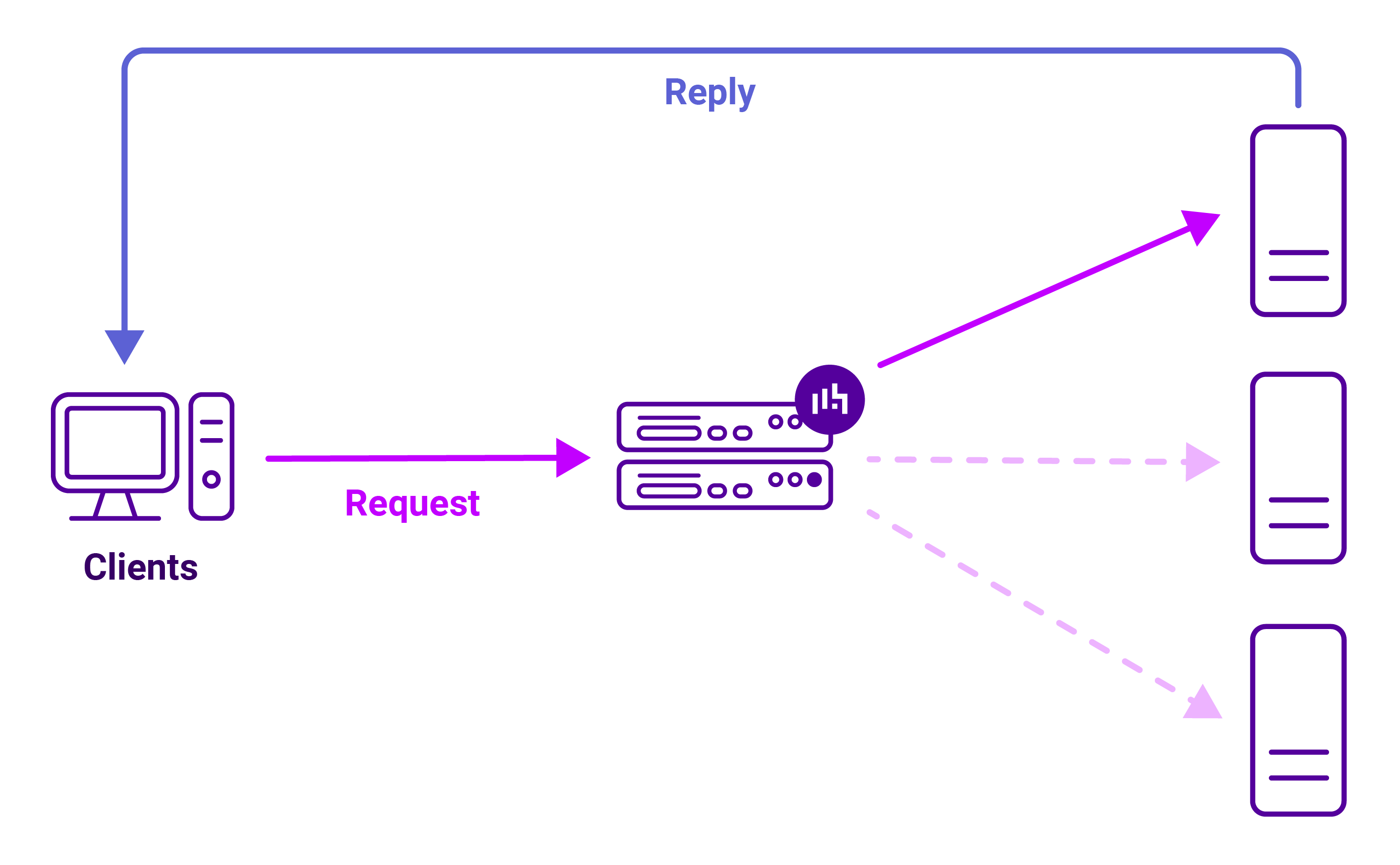

The idea is the same: reply traffic flows from the servers straight back to the clients, bypassing the load balancer on the return journey. Using DSR maximizes the throughput of return traffic and allows for near-endless scalability.
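To make the return path concrete, here is a minimal Python model of a DR-style flow. It assumes the classic LVS/DR behaviour: the load balancer rewrites only the destination MAC address, and each real server also holds the virtual IP (for example on a loopback interface, configured not to answer ARP for it), so it can reply from the VIP directly. All addresses and server names are hypothetical, and the frame model is deliberately simplified.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str

VIP = "192.0.2.10"              # hypothetical virtual IP clients connect to
LB_MAC = "02:00:00:00:00:01"    # hypothetical load balancer MAC
REAL_SERVERS = {                # hypothetical real servers: name -> MAC
    "web1": "02:00:00:00:00:11",
    "web2": "02:00:00:00:00:12",
}

def lb_forward(frame: Frame, server: str) -> Frame:
    """DR mode: rewrite only the layer 2 addresses; source and destination IPs stay as-is."""
    return replace(frame, src_mac=LB_MAC, dst_mac=REAL_SERVERS[server])

def server_reply(frame: Frame, server: str, gateway_mac: str) -> Frame:
    """The server owns the VIP locally (assumed loopback + ARP suppression), so it
    answers from the VIP straight to the client via its gateway, skipping the LB."""
    assert frame.dst_ip == VIP, "real server only accepts traffic addressed to the VIP"
    return Frame(src_mac=REAL_SERVERS[server], dst_mac=gateway_mac,
                 src_ip=VIP, dst_ip=frame.src_ip)

# Usage: a client request arrives at the LB from an upstream router (hypothetical MACs).
request = Frame("02:00:00:00:00:aa", LB_MAC, "198.51.100.7", VIP)
forwarded = lb_forward(request, "web1")
reply = server_reply(forwarded, "web1", gateway_mac="02:00:00:00:00:fe")
print(forwarded.dst_ip, reply.src_ip, reply.dst_ip)
```

Because the destination IP is never rewritten, the server sees the original client address and can reply to it directly; the load balancer's MAC never appears in the reply frame.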

It’s been a little over a year since we published our previous blog post on the matter, and we have a small update: we still love DSR, but…

It’s still often misunderstood

It’s still the case that DSR doesn’t always get a fair hearing, often because it isn't fully understood. That’s certainly something we can help with: we’ve produced a handy dandy video overview of DSR and how it works. We specifically cover 'layer 4 DR mode', which is the name of our load balancer’s DSR implementation.

It’s also true that some load balancer vendors use fear, uncertainty, and doubt to try and discredit the idea of DSR in favor of more complex solutions. The alternatives are almost certainly less efficient and require more computing power, which in turn means more expensive hardware. While alternative load balancing methods do tend to offer more features, if those features aren’t needed or used, it starts to look more like an exercise in upselling.

It works great in tandem with Layer 7 load balancing

While we’ve discussed this subject before in a blog post on load balancing your load balancers, it’s worth mentioning again as it’s a very cool concept used by some of the world’s largest organisations.

Using a two-tiered load balancing model, it’s possible to serve far greater volumes of traffic than would otherwise be possible. An initial tier of layer 4 load balancers (DSR is a perfect solution here) distributes inbound traffic across a second tier of layer 7, proxy-based load balancers. Splitting up the traffic in this way allows the more computationally expensive work of the proxy load balancers to be spread across multiple nodes. The whole solution can be built on commodity hardware and scaled horizontally over time to meet changing needs.
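One detail the first tier has to get right is sending every packet of a given connection to the same second-tier proxy. A minimal sketch, assuming flows are identified by their 5-tuple and picked with rendezvous (highest random weight) hashing, which is one common choice rather than the method any particular product necessarily uses; the proxy names are hypothetical.

```python
import hashlib

def _score(node: str, flow: tuple) -> int:
    """Deterministic pseudo-random weight for a (proxy, flow) pair."""
    key = f"{node}|{flow}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")

def pick_proxy(flow: tuple, proxies: list[str]) -> str:
    """Rendezvous hashing: the proxy with the highest score for this flow wins."""
    return max(proxies, key=lambda node: _score(node, flow))

# Usage: hypothetical layer 7 tier and synthetic client flows (src_ip, src_port, dst_ip, dst_port, proto).
tier2 = ["haproxy-a", "haproxy-b", "haproxy-c"]
flows = [(f"198.51.100.{i % 250}", 40000 + i, "192.0.2.10", 443, "tcp") for i in range(1000)]

before = {f: pick_proxy(f, tier2) for f in flows}
after = {f: pick_proxy(f, tier2 + ["haproxy-d"]) for f in flows}  # scale out by one node
moved = [f for f in flows if before[f] != after[f]]

# Only flows now scoring highest on the new node change proxy; the rest stay put.
assert all(after[f] == "haproxy-d" for f in moved)
print(f"{len(moved)} of {len(flows)} flows moved to the new proxy")
```

The design choice matters for horizontal scaling: adding a proxy to the second tier only remaps the share of flows it takes over, rather than reshuffling existing connections across every node.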

One of the best talks we saw during our visit to the HAProxy Conference 2019 was by Joe Williams from GitHub, who talked about using a multi-tiered load balancing approach with tunnel-based DSR load balancing at the front end. It’s a great example of the clever things that can be done using DSR, especially when the underlying infrastructure can be optimized and tinkered with. The talk is currently available on YouTube and is well worth a watch.

It’s a rock solid solution for object storage

We’ve seen the demand to load balance object storage solutions explode over the past few years. Layer 7 based solutions are undoubtedly simple and flexible, but DSR / layer 4 DR mode still has unbeatable performance. In particular, we've found DR mode useful for read-heavy object storage deployments because of the high ratio of reply traffic to request traffic. The much larger volume of reply (read) traffic bypasses the load balancer and is not constrained by the load balancer’s network throughput.
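A rough back-of-the-envelope comparison shows why the reply-to-request ratio matters. This sketch assumes a hypothetical read-heavy workload and ignores protocol overhead (headers, TCP ACKs from clients, which still pass through the load balancer in DR mode); the numbers are illustrative, not measurements.

```python
def lb_traffic_gbps(request_gbps: float, reply_gbps: float, dsr: bool) -> float:
    """Traffic the load balancer must forward (simplified model).

    Proxy or NAT style: both requests and replies transit the load balancer.
    DSR / DR mode: only requests do; replies go straight from server to client.
    """
    return request_gbps if dsr else request_gbps + reply_gbps

# Hypothetical read-heavy object storage workload: 1 Gbps of requests, 20 Gbps of reads.
requests, replies = 1.0, 20.0
print("proxy/NAT:", lb_traffic_gbps(requests, replies, dsr=False))  # 21.0
print("DSR:      ", lb_traffic_gbps(requests, replies, dsr=True))   # 1.0
```

Under these assumptions, the load balancer in DR mode handles roughly a twentieth of the traffic it would carry as a proxy, so read throughput is bounded by the servers and the network rather than by the load balancer's interfaces.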

Our conclusion

Traditional layer 4 load balancing is showing no signs of disappearing any time soon. Layer 7 solutions are absolutely becoming ever faster, more mature, and increasingly feature-packed with each passing year. Even so, our position on DSR remains as consistent as ever: we still love and recommend it to our customers for its unbeatable performance, simplicity, and consistency.